Statistical power is about whether an experiment or study is likely to reveal an effect if it is present. Without a sufficiently ‘powerful’ study, you risk being in the middle ground of ‘not proven’, not being able to make a strong statement either for or against whatever effect, system, or theory you are testing.

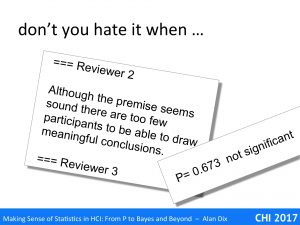

You’ve recruited your participants and run your experiment or posted an online survey and gathered your responses; you put the data into SPSS and … not significant. Six months work wasted and your plans for your funded project or PhD shot to ruins.

How do you avoid the dread “n.s.”?

Part of the job of statistics is to make sure you don’t say anything wrong, to ensure that when you say something is true, there is good evidence that it really is.

This is the why in traditional hypothesis testing statistics, you have such a high bar to reject the null hypothesis. Typically the alternative hypothesis is the thing you are really hoping will be true, but you only declare it likely to be true if you are convinced that the null hypothesis is very unlikely.

Bayesian statistics has slightly different kinds of criteria, but is in the end doing the same things, ensuring you down have false positives.

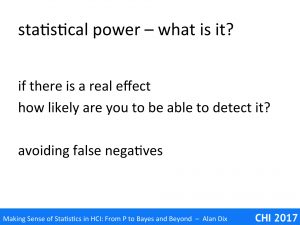

However, you can have the opposite problem, a false negative — there may be a real effect there, but your experiment or study was simply not sensitive enough to detect it.

Statistical power is all about avoiding these false negatives. There are precise measures of this you can calculate, but in broad terms, it is about whether an experiment or study is likely to reveal an effect if it is present. Without a sufficiently ‘powerful’ study, you risk being in the middle ground of ‘not proven’, not being able to make a strong statement either for or against whatever effect, system, or theory you are testing.

(Note the use of the term ‘power’ here is not the same as when we talk about power-law distributions for network data).

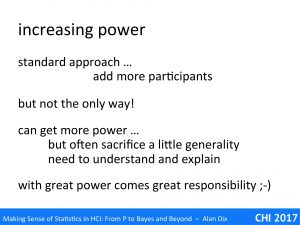

The standard way to increase statistical power is simply to recruit more participants. No matter how small the effect, if you have a sufficiently large sample, you are likely to detect it … but ‘sufficiently large’ may be many, many people.

In HCI studies the greatest problem is often finding sufficient participants to do meaningful statistics. For professional practice we hear that ‘five users are enough‘, but less often that this figure was based on particular historical contingencies and in the context of single formative iterations, not summative evaluations, which still need the equivalent of ‘power’ to be reliable.

Happily, increasing the number of participants is not the only way to increase power.

In blogs over the next week or two, we will see that power arises from a combination of:

- the size of the effect you are trying to detect

- the size of the study (number of trails/participants) and

- the size of the ‘noise’ (the random or uncontrolled factors).

We will discuss various ways in which careful design, selection of subjects and tasks can increase the power of your study albeit sometimes requiring care in interpreting results. For example, using a very narrow user group can reduce individual differences in knowledge and skill (reduce noise) and make it easier to see the effect of a novel interaction technique, but also reduces generalisation beyond that group. In another example, we will also see how careful choice of a task can even be used to deal with infrequent expert slips.

Often these techniques sacrifice some generality, so you need to understand how your choices have affected your results and be prepared to explain this in your reporting: with great (statistical) power comes great responsibility!

However, if a restricted experiment or study has shown some effect, at least you have results to report, and then, if the results are sufficiently promising, you can go on to do further targeted experiments or larger scale studies knowing that you are not on a wild goose chase.