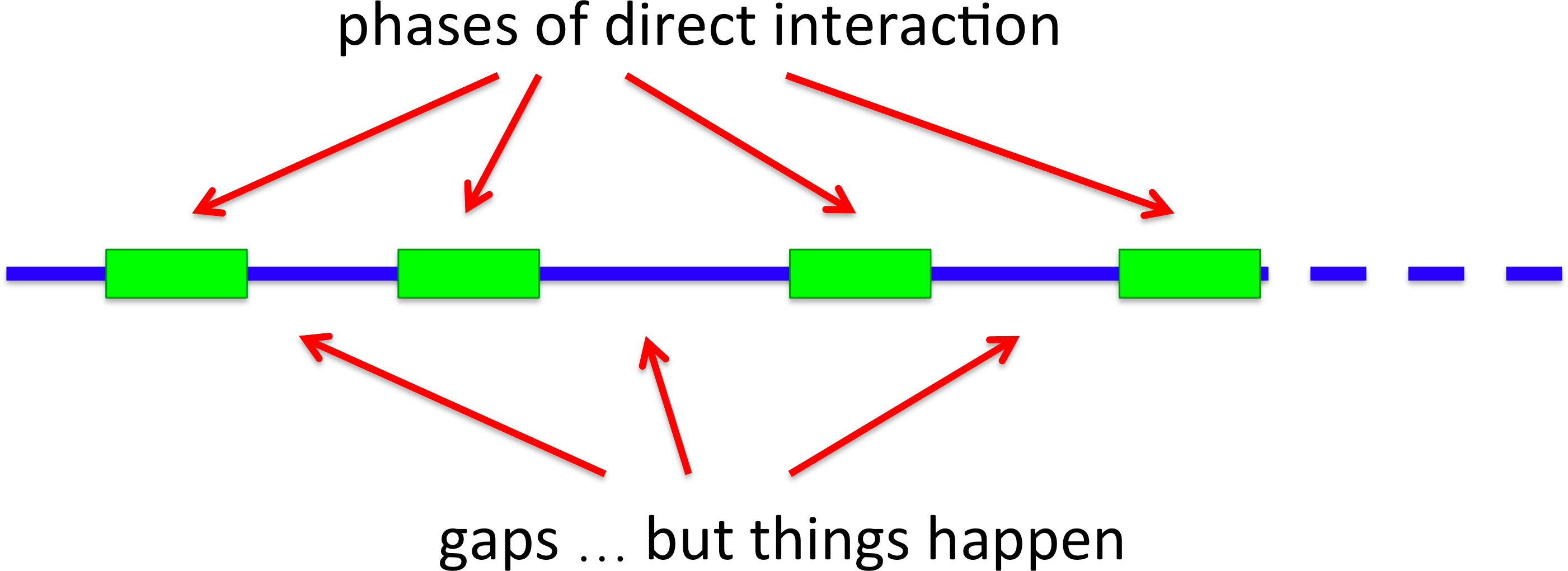

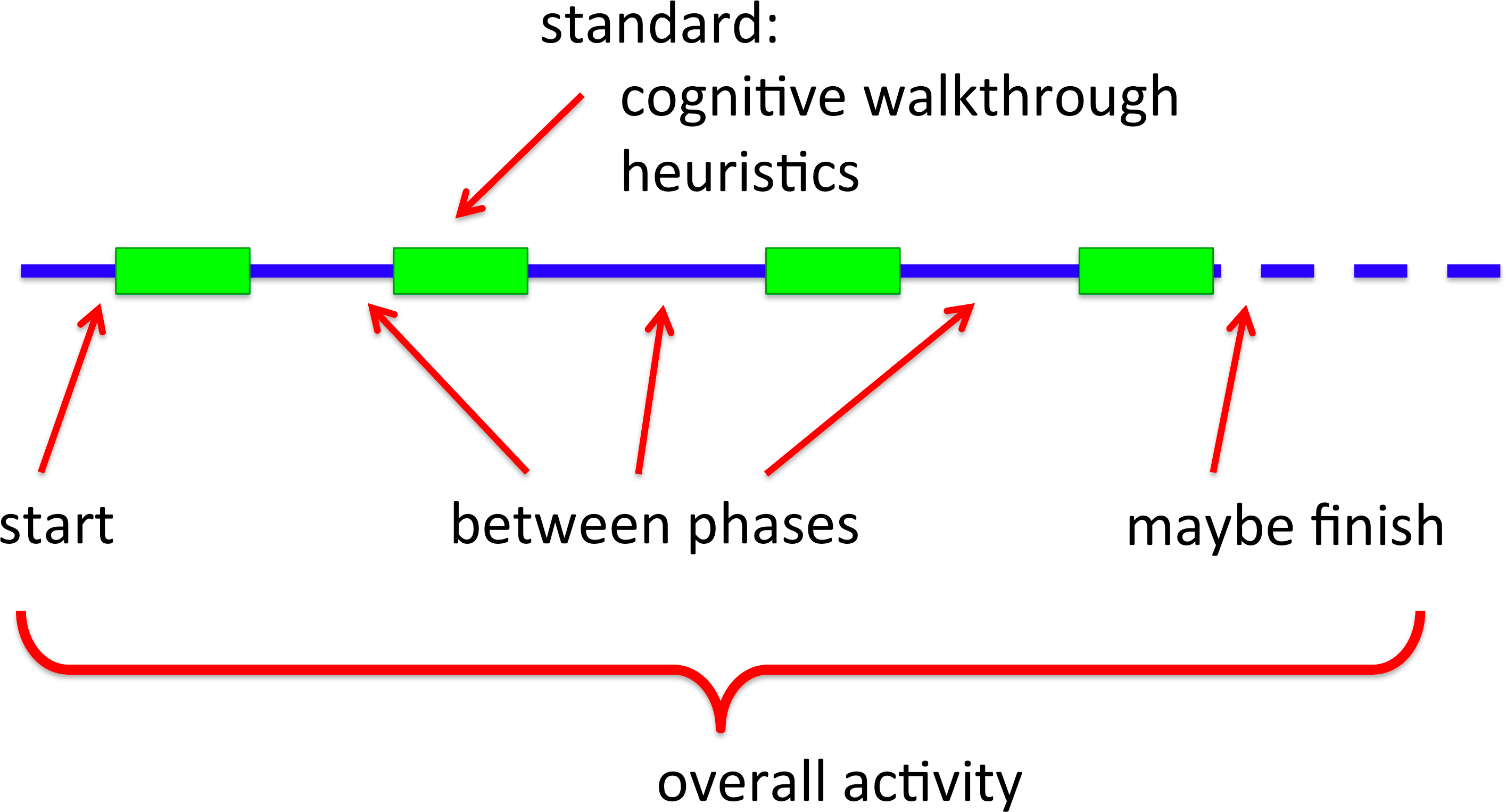

This paper proposes first steps towards practical techniques for the expert evaluation of long-term interactions, motivated by the need to evaluate such systems in a consultancy setting. Some interactions are time-limited and goal-driven, for example withdrawing money at an ATM. However, these are typically embedded within longer-term interactions, such as those with the banking system as a whole. Numerous evaluation and design tools exist for the former, but long-term interaction is less well served. To fill this gap, new evaluation prompts are presented that draw on the style of cognitive walkthroughs and extend it to support long-term interaction.

Keywords: long-term interaction, expert evaluation, cognitive walkthrough, interaction design

Template for extended walkthrough
