
Figure: the Petabox [5]

* An early web copy of this article had 3.8 terabytes for some reason. 300 Mbytes is the figure in the dust article: 10 kbytes/second (ISDN quality) x 30 million seconds per year.

Some years ago I did some back-of-the-envelope calculations on what it would take to store an audio-visual record of your complete life experiences [1]. The figure was surprisingly small: a mere 300 megabytes per year*. Given extrapolations of Moore's-law doubling in storage capacity, our whole life's experiences would fit in no more than a grain of dust in 70 years' time.
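The multiplication quoted in the footnote can be checked directly. This is only a sketch: it assumes a 'kbyte' is 1,000 bytes, and the variable names are mine rather than anything from the dust article. Taken at face value the product comes out at about 3 x 10^11 bytes a year, which in decimal units is nearer 300 gigabytes than 300 megabytes, so either one of the quoted inputs or the unit may have slipped somewhere along the way; the sketch does not try to decide which.

```python
# Inputs exactly as quoted in the footnote (decimal units are an assumption).
BYTES_PER_SECOND = 10_000        # "10 kbytes/second (ISDN quality)"
SECONDS_PER_YEAR = 30_000_000    # "30 million seconds per year"
YEARS = 70                       # lifetime used in the text

bytes_per_year = BYTES_PER_SECOND * SECONDS_PER_YEAR
lifetime_bytes = bytes_per_year * YEARS

print(f"per year: {bytes_per_year:.1e} bytes")
print(f"over {YEARS} years: {lifetime_bytes:.1e} bytes")
```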

Of course this is about recording video and audio that come into the body - what about what is inside our heads?

Our brains contain about 10 billion neurons, each connected to between 1000 and 10,000 others. It is commonly assumed that our long-term memories are stored in this configuration, both what is connected to what and in the strength of those synaptic connections [2].

If this is the case we can calculate the maximum information content of the human brain! One way to envisage this is as an advanced brain scanner that records the exact configuration of our neurons and synapses at a moment in time - how much memory would it take to store this?

For each neuron we would need to know physically where it is, but these x,y,z coordinates turn out to be the least of the memory requirements, needing a mere 90 bits per neuron (30 bits, giving 1 in a billion accuracy, for each coordinate). That is about 110 gigabytes for all the neurons.

The main information is held, as noted, in what is connected to what. To record this digitally we would need, for each of the 10,000 synapses of each neuron, a 'serial number' for the neuron it connects to and a strength. Given 10 billion neurons this serial number would need to be 34 bits, and so if we store the synapse strength using 6 bits (0-63), this means 40 bits or 5 bytes per synapse, so 50,000 bytes per neuron and 500 thousand billion bytes for the whole brain state.

That is, the information capacity of the brain is approximately 500 terabytes, or ½ a petabyte.
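The synapse sum can be written out as a few lines of Python. This is a minimal sketch using only the figures in the text (10 billion neurons, 10,000 synapses each, 6-bit strengths); the variable names are mine, and it takes the upper end of the 1000 to 10,000 range, so it is an upper-bound estimate rather than a measurement.

```python
import math

NEURONS = 10_000_000_000       # 10 billion, as in the text
SYNAPSES_PER_NEURON = 10_000   # upper end of the 1000-10,000 range
STRENGTH_BITS = 6              # synapse strength stored as 0-63

# Bits needed to give every neuron its own 'serial number'.
serial_bits = math.ceil(math.log2(NEURONS))

# 34 + 6 = 40 bits, which packs exactly into 5 bytes.
bytes_per_synapse = (serial_bits + STRENGTH_BITS) // 8
bytes_per_neuron = bytes_per_synapse * SYNAPSES_PER_NEURON
total_bytes = bytes_per_neuron * NEURONS

print(f"serial number: {serial_bits} bits")
print(f"per neuron: {bytes_per_neuron:,} bytes")
print(f"whole brain: {total_bytes / 1e12:.0f} terabytes")
```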

It is hard to envisage what half a petabyte is like in terms of information capacity.

One comparison would be with books. The Bible (a big book!) takes about 4.5 Mbytes to store, so our brain's capacity is equivalent to over 100 million Bibles, about the number which, stacked floor to ceiling, would fill a medium-sized church.

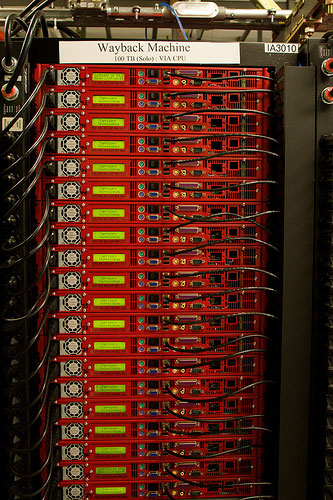

For a more computational comparison, the Internet Archive project stores dumps of the web donated by Alexa, an internet recommender and search company. These dumps are used for the 'Wayback Machine' that enables you to visit 'old' websites [3]. The current data comprises about 100 terabytes of compressed data. The uncompressed size is not quoted on the archive.org web site, but assuming a compression factor of around 30%, and also noting that the dump will not be complete, we can see the current web has a similar level of information capacity (but more in 'data' and less in link interconnections) to the brain.

As an alternative way to 'size' the web, consider that Google currently (Aug. 2005) indexes about 8 billion pages. Assuming this is perhaps half the total accessible pages and that each web page, including images, averages 40K [4], we get 640 terabytes, just over 1/2 petabyte.
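The same estimate as a short sketch, using only the figures above and the per-page breakdown from note [4] (about 13K of html plus 27K of images). The 'half of all pages' factor is the guess stated in the text, the variable names are mine, and 'K' is treated as 1,000 bytes for simplicity.

```python
GOOGLE_INDEXED_PAGES = 8_000_000_000   # Aug. 2005 figure quoted in the text
INDEXED_FRACTION = 0.5                 # guess: index covers half the accessible web
HTML_BYTES_PER_PAGE = 13_000           # from note [4]
IMAGE_BYTES_PER_PAGE = 27_000          # from note [4]

total_pages = GOOGLE_INDEXED_PAGES / INDEXED_FRACTION
bytes_per_page = HTML_BYTES_PER_PAGE + IMAGE_BYTES_PER_PAGE
web_bytes = total_pages * bytes_per_page

print(f"pages: {total_pages:.1e}")
print(f"web size: {web_bytes / 1e12:.0f} terabytes")
```

Halving or doubling either guess moves the answer by the same factor, so this is an order-of-magnitude figure at best.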

This is not to say the web is brain-like (although it has some such features), nor that the web in any way emulates the brain, but sheer information capacity is clearly not the defining feature of the human brain.

In order to store data such as movies, the Internet Archive project has designed a low-cost large-scale storage unit called the Petabox [5]. Large 19 inch racks store 100 terabytes of data each, so 5 tall racks, or 10 smaller filing-cabinet-sized racks, would store the 500 terabytes of our brain. In principle, if you had a brain scanner that could map our neuron connections, we could store these in a small machine room ... then perhaps through nano-technology one could restore the patterns, like a browser back button after a bad day :-/

If such far-fetched technology could exist it would mean people could effectively 'fork' their lives, having multiple streams of memory that share a beginning but have different experiences thereafter, time-sharing the body ... sounds like good science fiction!

Our brains are not just passive stores of information but are actively changing. Discussion of the power of the massive parallelism of the brain's thinking compared with the blindingly fast, but blinkeredly sequential, single track of electronic computation is now passé. However, having noted that the information capacity is not that great, what about the computational capacity: how does it rate?

At a simplified level each neuron's level of activation is determined by pulses generated at the (1000 to 10,000) synapses connected to it. Some have a positive excitatory effect; some are inhibitory. A crude model simply computes the weighted sum and 'fires' the neuron if the sum exceeds a value. The rate of this activity, the 'clock rate' of the human brain, is approximately 100 Hz - very slow compared to the GHz of even a home PC, but of course this happens simultaneously for all 10 billion neurons!
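The crude model just described is a threshold unit. A minimal sketch follows; the function name `fires`, the example weights and the threshold of 1.0 are all made up for illustration and are not taken from any neuroscience source.

```python
def fires(inputs, weights, threshold):
    """Return True if the weighted sum of the inputs exceeds the threshold.

    Positive weights play the role of excitatory synapses and
    negative weights the role of inhibitory ones.
    """
    weighted_sum = sum(w * x for w, x in zip(weights, inputs))
    return weighted_sum > threshold


# Hypothetical neuron with two excitatory synapses and one inhibitory one.
weights = [0.6, 0.7, -0.5]

excited = fires([1, 1, 0], weights, threshold=1.0)    # 0.6 + 0.7 is above 1.0
inhibited = fires([1, 1, 1], weights, threshold=1.0)  # the inhibitory input pulls it below

print(excited, inhibited)
```

Each `w * x` term, added into the running sum, is one multiply-and-add per synapse per cycle.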

If we think of the adding of one weighted synaptic value as a single neural operation (nuop), then each neuron performs approximately 10,000 nuops per cycle, that is 1 mega-nuop per second. In total the 10 billion neurons in the brain perform 10 peta-nuops per second.

Now a nuop is not very complicated, just a small multiplication and an addition, so a 1 GHz PC could perhaps emulate 100 million nuops per second. Connected to the internet at any moment there are easily 100 million such PCs, that is a combined computational power of 10,000 million million nuops/sec ... that is 10 peta-nuops/sec ... hmm
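The arithmetic of the last two paragraphs, side by side. This sketch uses only the round numbers from the text; the 100 million nuops per PC and 100 million PCs online are the article's guesses rather than measured values, and the variable names are mine.

```python
NEURONS = 10_000_000_000       # 10 billion
SYNAPSES_PER_NEURON = 10_000   # upper end of the range
CYCLES_PER_SECOND = 100        # the brain's roughly 100 Hz 'clock'

nuops_per_neuron = SYNAPSES_PER_NEURON * CYCLES_PER_SECOND
brain_nuops = nuops_per_neuron * NEURONS

PCS_ONLINE = 100_000_000       # guess from the text
NUOPS_PER_PC = 100_000_000     # guess: a 1 GHz PC emulating nuops

internet_nuops = PCS_ONLINE * NUOPS_PER_PC

PETA = 10**15
print(f"brain:    {brain_nuops / PETA:.0f} peta-nuops/sec")
print(f"internet: {internet_nuops / PETA:.0f} peta-nuops/sec")
```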

Again one should not read too much into this. The level of interconnectivity of those 100 million PCs is far weaker than our 10 billion neurons: good for lots of local computation (how many copies of Internet Explorer?), but poorer at producing synchronised activity except where it is centrally orchestrated, as in a webcast. In our brain each neuron is influenced by 10,000 others 100 times a second. If we imagined trying to emulate this using those PCs, each would require 100 Kbytes per second of data flowing in and out (and in fact several times that for routing information), but more significantly the speed of light means that latencies on a global 'brain' would limit it to no more than 10 cycles per second.
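The speed-of-light limit can be roughed out as follows. The sketch makes several assumptions of mine that are not in the text: signals travel at the vacuum speed of light along the Earth's surface, the two most distant PCs are half a circumference apart, and one 'cycle' needs a full round trip between them. Real networks are considerably slower, so this is an optimistic upper bound.

```python
EARTH_CIRCUMFERENCE_KM = 40_075   # assumed equatorial circumference
LIGHT_SPEED_KM_PER_S = 299_792    # speed of light in a vacuum

# Farthest-apart machines are half the circumference away, so a
# there-and-back round trip covers one full circumference.
round_trip_km = 2 * (EARTH_CIRCUMFERENCE_KM / 2)
round_trip_seconds = round_trip_km / LIGHT_SPEED_KM_PER_S

max_cycles_per_second = 1 / round_trip_seconds

print(f"round trip: {round_trip_seconds * 1000:.0f} ms")
print(f"at most {max_cycles_per_second:.1f} cycles per second")
```

Under these assumptions the bound lands a little below 10 cycles per second, in line with the figure in the text.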

For an electronic computer to have computational power even approaching the human brain it would need those 100 million PCs' worth of computation much closer to each other ... perhaps Beijing in a few years' time ... or even Japan.

And talking of Japan ...

Let's recap: the computation of the brain is about 10 peta-nuops/sec. The speed of supercomputers is usually measured in flops (floating-point operations per second). A nuop is actually a lot simpler than a floating-point operation, but of course our brains do LOTS of nuops. However, supercomputers are catching up. The fastest supercomputer today is IBM's Blue Gene, hitting a cool 136.8 tera-flops, still a couple of orders of magnitude slower than the 10 peta-nuop brain, but Japan has recently announced it is building a new supercomputer to come online in March 2011. How fast? Guess ... you're right: 10 peta-flops [6].
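To put a number on 'a couple of orders of magnitude', here is a small sketch comparing the two figures just quoted. It treats one nuop as if it were comparable to one flop, which, as noted above, flatters the brain somewhat; the variable names are mine.

```python
import math

BRAIN_NUOPS_PER_SEC = 10e15        # 10 peta-nuops/sec, from the text
BLUE_GENE_FLOPS = 136.8e12         # 136.8 tera-flops, from the text
PLANNED_JAPAN_FLOPS = 10e15        # 10 peta-flops, announced for 2011 [6]

gap_today = BRAIN_NUOPS_PER_SEC / BLUE_GENE_FLOPS
gap_in_orders = math.log10(gap_today)
gap_planned = BRAIN_NUOPS_PER_SEC / PLANNED_JAPAN_FLOPS

print(f"Blue Gene is about {gap_today:.0f} times slower "
      f"({gap_in_orders:.1f} orders of magnitude)")
print(f"planned machine: gap of {gap_planned:.0f}x")
```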

Philosophers of mind and identity have long debated whether our sense of mind, personhood or consciousness is intrinsic to our biological nature, or whether a computer system emulating the brain would have the same sense of consciousness as an emergent property of its complexity [7] ... we are nearing the point when this may become an empirically testable issue!

Of course, this does not mean that the web or a new supercomputer is in some way like or equal to the human mind. What it does mean is that the specialness of the human brain is not because of simple capacity or speed. If size were all that matters in cognition, we have already been beaten by our own creations. Really the specialness of our minds is in their organisation and the things that make us human beyond simple information: compassion, pain, heroism, joy - we are indeed fearfully and wonderfully made.

notes and references

- [1] A. Dix (2002). the ultimate interface and the sums of life? Interfaces, issue 50, Spring 2002. p. 16. http://www.hcibook.com/alan/papers/dust2002/

- [2] There is some debate whether the glial cells, powerhouses for the neurons, themselves are part of the brain's memory process. These are about 10 times more numerous than neurons, but do not have a similar level of interconnection so would not add significantly to the total capacity.

  On the other side, it is certainly the case that neurons do not operate totally independently, but instead are in larger loosely defined assemblies. This is important: if one neuron or one synapse breaks we do not lose any identifiable item of memory; instead the memory structure is more redundantly stored. This means that the actual information capacity is probably several orders of magnitude smaller than the 1/2 petabyte estimate.

- [3] The Internet Archive was founded in order to preserve digital repositories in the same way as a traditional historical archive preserves documents and artefacts. As well as the web itself the project is producing archives of audio and video materials. http://www.archive.org/

- [4] My own 'papers' directory contains approx 180 html files comprising 2.3 Mbytes and a further 800 files (JPEG, GIF, PDF, etc.) totalling a further 73 Mbytes, of which the images are about 4.7 Mbytes. That is an average of around 13K of html text per web page and a further 27K of images and nearly 400K of additional material. Looking just at web pages including images, this is about 40K per page.

- [5] The Petabox was designed for the Internet Archive project, particularly as the archive expanded into audio-visual materials. A spin-off company, Capricorn Technologies, is also selling Petabox products commercially. http://www.archive.org/web/petabox.php

- [6] The Guardian, Tuesday July 26, 2005. p. 3. "How 10 quadrillion sums a second will make computer the world's fastest", Justin McCurry. http://www.guardian.co.uk/international/story/0,,1536006,00.html

- [7] See John Searle's "The Mystery of Consciousness" for an overview of several positions on the relationship between brain and consciousness, although do beware: Searle is better at noticing the weaknesses in other people's arguments than his own! Whilst there is little stomach in modern philosophy for non-corporeal minds/soul/spirit as part of their accounts of consciousness, some do look at quantum effects to explain some of the amazing qualities of the human mind. For example, Penrose asks whether the tiny cytoskeletons within cells have a role. If this were the case and superimposed quantum states were a significant issue in the brain's operation, the figures in this article would have to be multiplied enormously or maybe infinitely. However, the broader tendency is to assume that our consciousness is a property of the more traditionally understood biological activity of neurons. Some, such as Dennett, would expect that a simulation of the brain would have the same level of consciousness as a living brain. However, Dennett sort of sees our experiences of consciousness and being as a kind of misapprehension or misinterpretation anyway. Searle himself argues that a non-biological mind would be different ... but the arguments end in mutually referential cycles! At heart is the issue of 'qualia', the actual 'feltness' of things rather than the computational responses we have to stimuli.