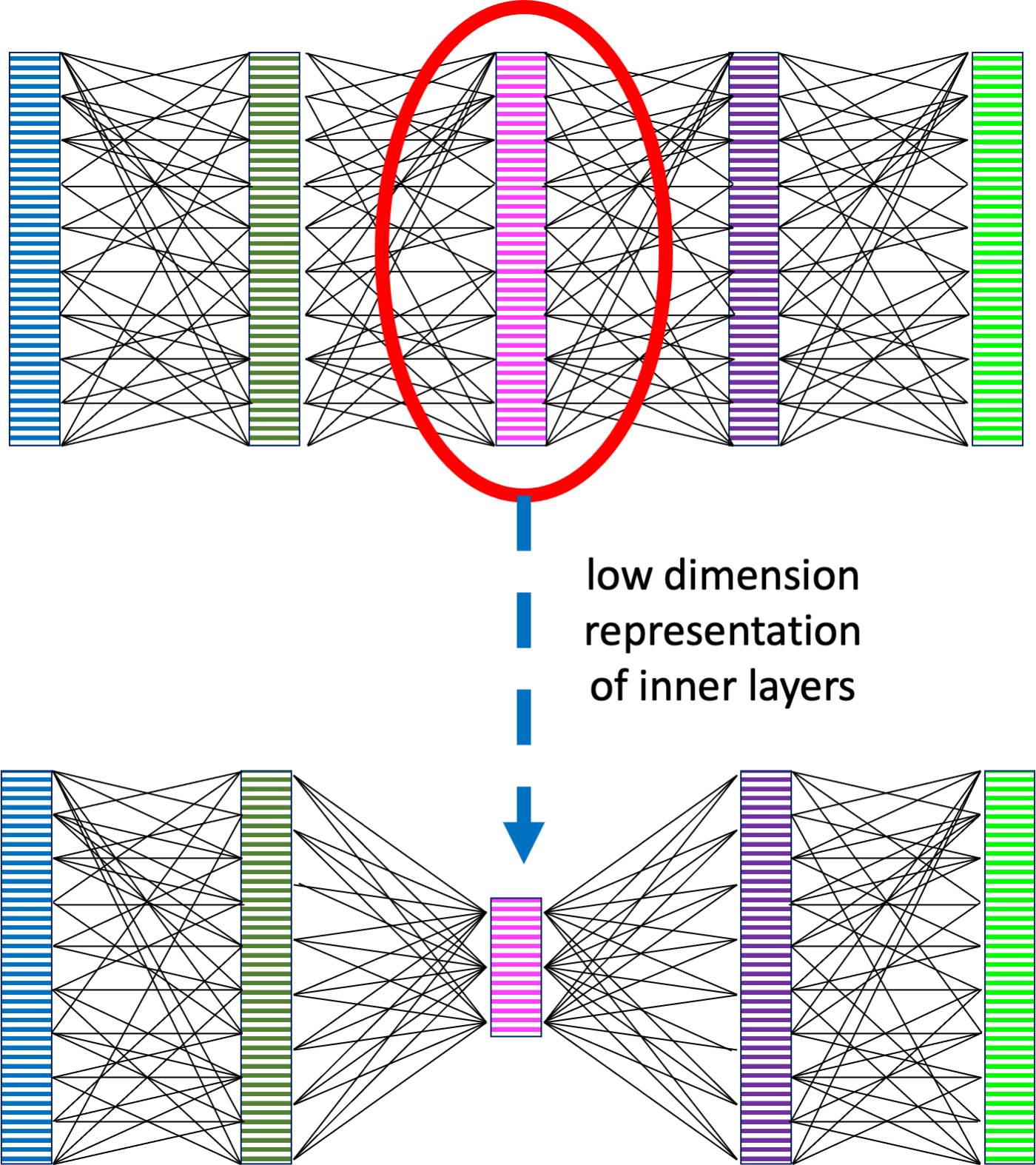

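LoRA (low-rank adaptation of large language models) applies dimension reduction to the inner layers of the deep neural network used in a large language model: the pretrained weights are kept frozen, and only a small low-rank update to them is trained. This greatly reduces the cost of retraining, and of storing and running multiple adapted versions of a model, which has economic and environmental advantages.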
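As a rough illustration of where the savings come from, the sketch below applies a low-rank update to a single weight matrix using NumPy. The layer sizes, the rank, the scaling value `alpha` and the helper name `lora_forward` are all assumptions chosen for the example, not values taken from this book or from any particular library.

```python
import numpy as np

rng = np.random.default_rng(0)

# Illustrative sizes only (assumed for this sketch).
d_out, d_in, rank = 512, 512, 8
alpha = 16  # scaling hyperparameter; the value is an assumption

W = rng.standard_normal((d_out, d_in))         # frozen pretrained weights
A = rng.standard_normal((rank, d_in)) * 0.01   # trainable, small random init
B = np.zeros((d_out, rank))                    # trainable, zero init

def lora_forward(x):
    """Frozen layer output plus the low-rank update (alpha / rank) * B @ A."""
    return W @ x + (alpha / rank) * (B @ (A @ x))

x = rng.standard_normal(d_in)

full_params = W.size            # parameters trained in full fine-tuning
lora_params = A.size + B.size   # parameters trained with the low-rank update
print(full_params, lora_params)
```

With these assumed sizes, the low-rank factors hold 8,192 trainable values against 262,144 in the full matrix. Because `B` starts at zero, the adapted layer initially produces exactly the same output as the frozen one, so training begins from the pretrained behaviour.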

Used in Chap. 23: page 370

Also known as: low-rank adaptation of large language models

Used in glossary entries: deep neural network, dimension reduction, large language model

Reducing the dimensionality of inner-layer weight updates to cut retraining and runtime costs