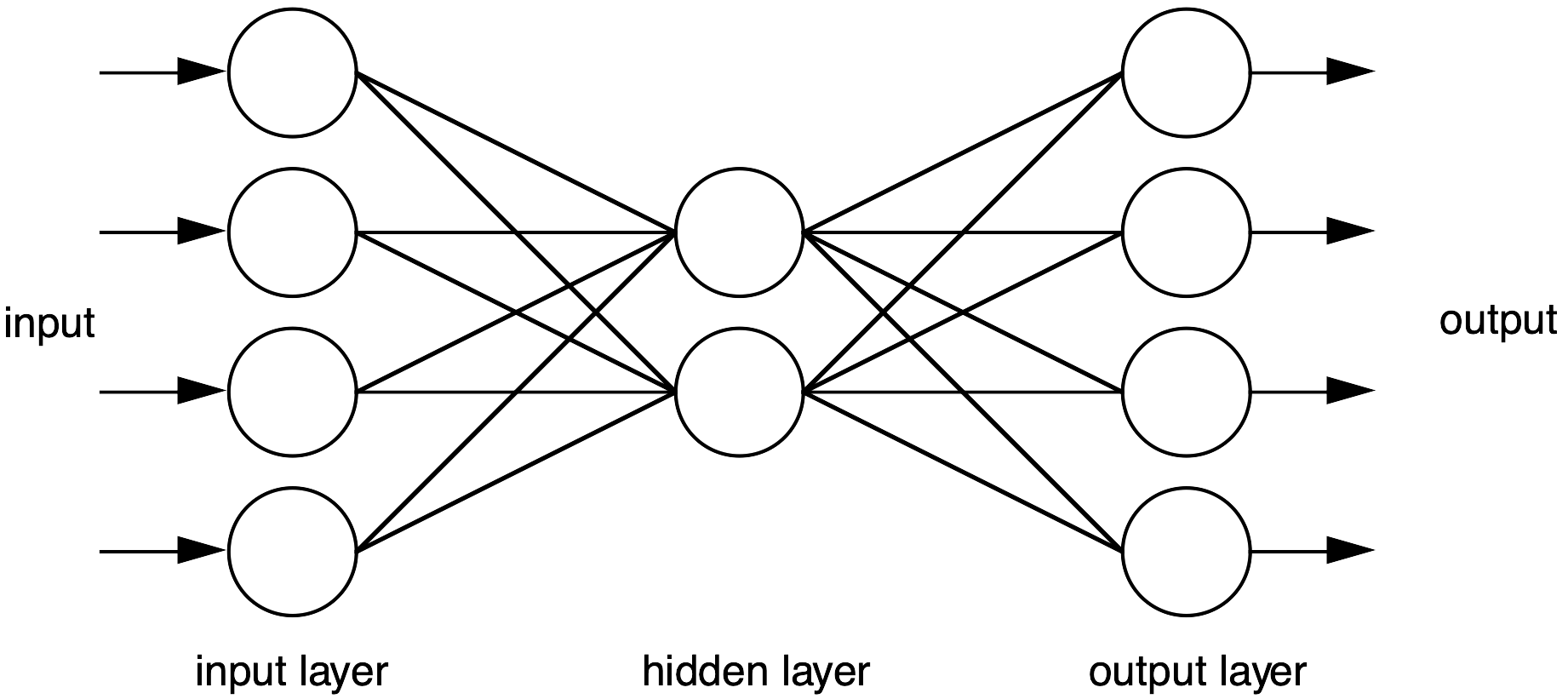

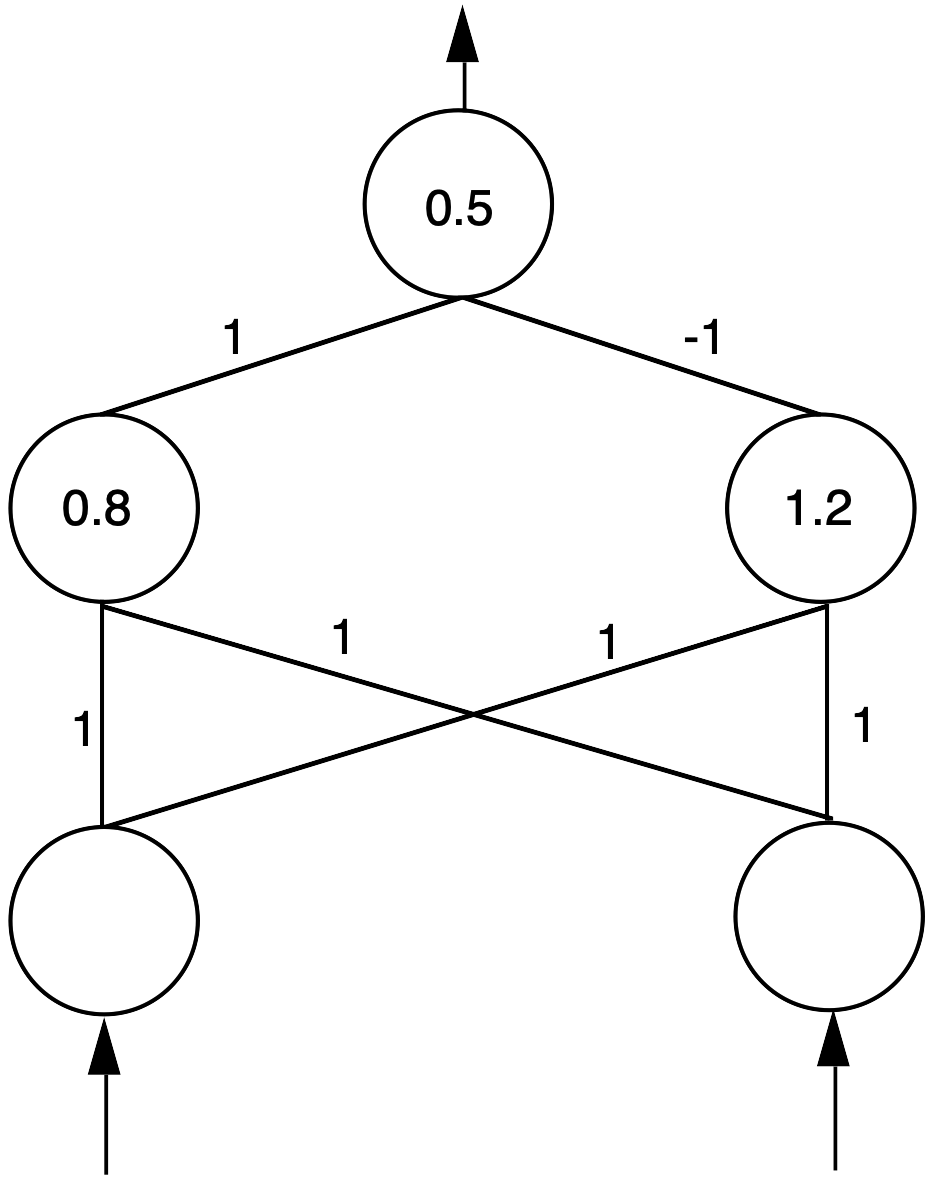

A multi-layer perceptron is an early multi-layer neural network architecture with precisely three layers: input, hidden and output. The combination of sigmoid activation functions, which are smooth and differentiable unlike a hard threshold, and backpropagation enabled the weights of the inner hidden layer to be learnt, thus beginning the modern field of neural networks.

Used in Chap. 6: pages 74, 75, 76, 81; Chap. 7: page 99

Used in glossary entries: backpropagation, multi-layer neural network, neural network, sigmoid function

A multi-layer perceptron architecture.

A simple multi-layer perceptron to solve the XOR problem.
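
The XOR network can be sketched in a few lines of Python. This is an illustration only: the weights and biases below are hand-picked assumptions, not the values shown in the figure, and the names `sigmoid` and `xor_mlp` are hypothetical. It assumes two inputs, two sigmoid hidden units (one behaving roughly like OR, one like NAND) and a single sigmoid output unit that behaves roughly like AND.

```python
import math


def sigmoid(z):
    """Smooth, differentiable squashing function mapping any real z into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def xor_mlp(x1, x2):
    """Forward pass of a three-layer perceptron with hand-chosen (assumed) weights."""
    # Hidden layer: large weights make each sigmoid act almost like a hard threshold.
    h_or = sigmoid(20 * x1 + 20 * x2 - 10)     # close to 1 if either input is 1
    h_nand = sigmoid(-20 * x1 - 20 * x2 + 30)  # close to 1 unless both inputs are 1
    # Output layer: close to 1 only when both hidden units are on.
    return sigmoid(20 * h_or + 20 * h_nand - 30)


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, round(xor_mlp(a, b)))
```

In practice the weights would be learnt by backpropagation rather than set by hand; fixing them here simply shows that one hidden layer is enough to represent XOR, which a single-layer perceptron cannot do.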