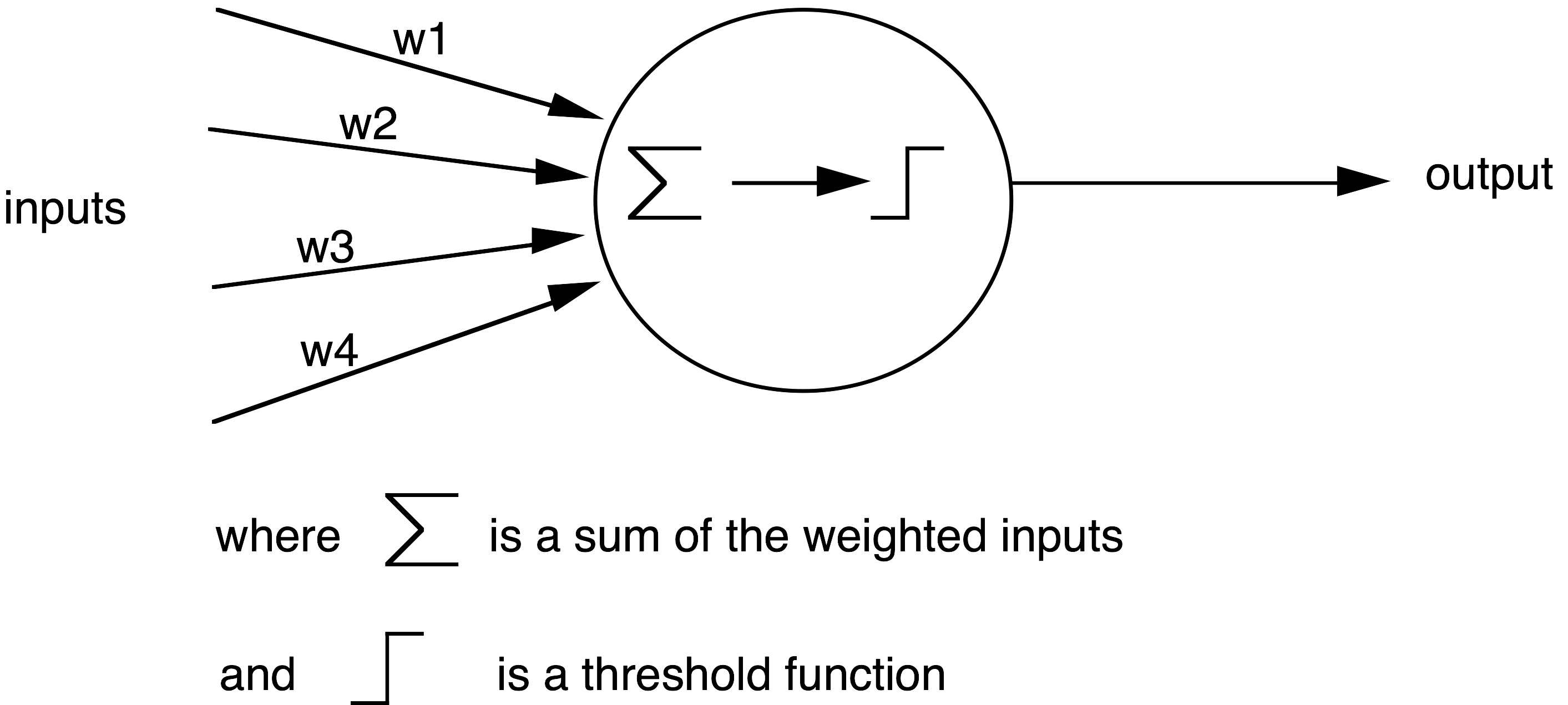

The perceptron is the earliest kind of artificial neuron. It has a number of inputs, each with its own weight. In operation, each input is multiplied by its weight and the results are summed to give an overall input activation. This is then compared with a threshold: if the input activation exceeds the threshold, the perceptron fires. A single layer of simple perceptrons cannot solve problems that are not linearly separable, such as the XOR problem, but multi-layer perceptrons are harder to train. For many years this limited the practical development of artificial neural networks, until the adoption of non-linear, differentiable threshold functions and the development of backpropagation.
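The Python sketch below illustrates the entry. It is not taken from the chapters cited. `perceptron_fires` implements the weighted sum and threshold test described above, using a strict "exceeds" comparison. `train_perceptron` uses the classic perceptron learning rule, which the entry itself does not describe, so treat it as an assumption. That rule learns AND, which is linearly separable, but it can never get all four XOR cases right. `two_layer_xor` shows that two layers can represent XOR. Its weights and thresholds are hypothetical values chosen by hand rather than learned, which reflects the point that multi-layer perceptrons are harder to train.

```python
# Truth tables as (inputs, target) pairs.
AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]


def perceptron_fires(inputs, weights, threshold):
    """Return 1 if the weighted sum of the inputs exceeds the threshold, else 0."""
    activation = sum(x * w for x, w in zip(inputs, weights))
    return 1 if activation > threshold else 0


def train_perceptron(samples, epochs=20, lr=1.0):
    """Classic perceptron learning rule (an assumption; not described in the entry).

    Weights move toward inputs that should have fired and away from inputs
    that fired wrongly; the threshold moves in the opposite direction.
    """
    weights = [0.0, 0.0]
    threshold = 0.0
    for _ in range(epochs):
        errors = 0
        for inputs, target in samples:
            error = target - perceptron_fires(inputs, weights, threshold)
            if error != 0:
                errors += 1
                weights = [w + lr * error * x for w, x in zip(weights, inputs)]
                threshold -= lr * error
        if errors == 0:  # every sample classified correctly: stop early
            break
    return weights, threshold


def two_layer_xor(inputs):
    """XOR from three perceptrons with hand-picked (hypothetical) weights."""
    h_or = perceptron_fires(inputs, (1, 1), 0.5)       # fires if either input is 1
    h_nand = perceptron_fires(inputs, (-1, -1), -1.5)  # fires unless both are 1
    return perceptron_fires((h_or, h_nand), (1, 1), 1.5)  # AND of the two


def solves(samples, weights, threshold):
    return all(perceptron_fires(x, weights, threshold) == y for x, y in samples)


w_and, t_and = train_perceptron(AND)
w_xor, t_xor = train_perceptron(XOR)
print("single perceptron learns AND:", solves(AND, w_and, t_and))  # → True
print("single perceptron learns XOR:", solves(XOR, w_xor, t_xor))  # → False
print("two layers compute XOR:", all(two_layer_xor(x) == y for x, y in XOR))  # → True
```

Raising `epochs` does not change the XOR result. No single line through the input plane separates the XOR cases, so every set of weights and threshold misclassifies at least one of them.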

Used in Chap. 6: pages 74, 75, 83, 84; Chap. 7: page 92; Chap. 8: pages 102, 103; Chap. 9: page 127

Used in glossary entries: artificial neural networks, backpropagation, threshold, XOR problem

A single perceptron