Outputs accounted for 70% of the overall REF profile in Panel B and were hence the most important, as well as the most voluminous and time-consuming, part of the process. Various kinds of output were allowed. The vast majority were some form of article (journal or conference paper), but there were a small number of other types: books, government reports, software, patents, international standards and artistic installations.

Pre-Submission

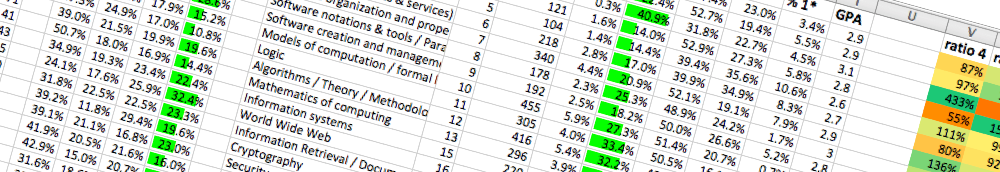

As well as the standard REF submission metadata (DOI, dates, authorship, etc.), SP11 asked institutions to add an ACM topic code to each output, entered in the 100-word free-text field.

Output Allocation

An automatic allocation system was used based on the ACM topic codes. These were used in two ways:

- Rather than using self-declared research groups within UoAs, the ACM topics were used to cluster staff into automatically derived groups.

- Groups of outputs for each UoA were automatically allocated to panellists based on self-declared levels of expertise for the different ACM topic areas. Each output was allocated to three panellists for grading.
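The allocation step can be sketched as a greedy assignment. This is a minimal illustration, not the actual SP11 system, whose internals are not described here: the function name `allocate`, the numeric expertise levels and the optional `max_load` cap are all hypothetical.

```python
from collections import defaultdict


def allocate(outputs, expertise, per_output=3, max_load=None):
    """Greedily assign each output to `per_output` panellists.

    outputs:   dict of output id -> ACM topic code
    expertise: dict of panellist -> {topic code: declared level}
               (hypothetical levels, e.g. 0 = none, 1 = some, 2 = expert)
    max_load:  optional hypothetical cap on outputs per panellist
    """
    load = defaultdict(int)
    allocation = {}
    for out_id, topic in sorted(outputs.items()):
        eligible = [p for p in expertise
                    if max_load is None or load[p] < max_load]
        # Highest declared expertise first, then least-loaded panellist,
        # then name, so that ties are broken deterministically.
        eligible.sort(key=lambda p: (-expertise[p].get(topic, 0), load[p], p))
        if len(eligible) < per_output:
            raise ValueError(f"not enough panellists with capacity for {out_id}")
        chosen = eligible[:per_output]
        for p in chosen:
            load[p] += 1
        allocation[out_id] = chosen
    return allocation


expertise = {
    "A": {"HCI": 2},
    "B": {"HCI": 1, "AI": 2},
    "C": {"AI": 1},
    "D": {"AI": 2},
}
print(allocate({"o1": "HCI", "o2": "AI"}, expertise))
# {'o1': ['A', 'B', 'C'], 'o2': ['D', 'B', 'C']}
```

Because it always prefers the highest declared expertise, this greedy version tends to concentrate outputs on experts; it says nothing about the deliberate mixing of panellists that the normalisation stage required.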

The advantages of this automatic system were:

- It worked uniformly for all institutions, irrespective of whether the UoA provided research group information.

- It was very fast: SP11 allocations were available in early January 2014, virtually immediately after the data became available. In contrast, other sub-panels’ hand-allocation processes took up to a month.

It should be noted that panellists had to ‘spread’ their declared expertise quite widely in order for the later normalisation algorithm (see below) to work. This meant, for example, that less than 20% of my own output allocation was in my core area of expertise, and that typically an output would have at best one expert and two non-expert reviewers.

This practice was very different from that of other panels, which placed far greater emphasis on panellist expertise.

Scoring Spreadsheets and Information

Personal scoring spreadsheets were generated by, and downloaded from, the REF secure web system. Apart from the sub-panel chairs, each panellist saw only the scoring information for the outputs allocated to them.

The spreadsheets were almost identical to the public-domain files available from the REF site (title, venue, type, length, date, DOI/ISSN/ISBN). In addition, however, the scoring spreadsheets included the staff member associated with each output, and blank scoring and comment fields for the panellist to fill in.

In particular, the spreadsheet did include Scopus citation data for each output, but NOT Google Scholar citation data. SP11 had wished to have the Google Scholar data available, but HEFCE were not able to arrange this with Google.

It should also be noted that the downloaded spreadsheets were initially ordered by staff member within institution. Panellists were free to re-order this spreadsheet as they wished, but the default reading order was within institution.

My own post-hoc analysis suggests that there was a significant level of implicit institutional bias. Given the well-known anchoring and halo effects in expert judgement, it seems likely that reading multiple outputs from the same institution in sequence was a contributing factor.

Calibration and Scoring Instructions

A small number of outputs were initially scored and then discussed by all panellists in order to establish shared criteria. However, the final normalisation process meant that this calibration was less critical than for other panels.

While venue and citation data were available, it was emphasised that these were not to be used as key indicators of quality. Rather, panellists could use them as part of their normal professional judgement in assessing the quality of the submission, but the primary consideration was the content of the outputs. The post-hoc analysis by Morris Sloman (SP11 deputy chair) does show that journal outputs were slightly more successful in terms of 4* scores; however, this is likely to be due to more rigorous review processes leading to higher-quality content. One would also expect higher-quality outputs to be submitted to more prestigious venues, but the aim was that venue was NOT used as a proxy for content quality.

Each element (originality, significance, and rigour) was scored separately on a 12-point scale, and the output as a whole was then given an overall 0–12 grade. These grades were interpreted roughly as follows:

| Grade | Interpretation |
|---|---|
| 0 | no research or disallowed |
| 1–3 | 1 star |
| 4–6 | 2 star |
| 7–9 | 3 star |
| 10–12 | 4 star |
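The banding in this table is simple enough to express directly. The sketch below (the name `star_rating` is hypothetical) follows the table for integer grades. How fractional scores, such as the normalised values discussed later, were banded is not stated, so the midpoint cut-offs used for them here are an assumption.

```python
def star_rating(score: float) -> int:
    """Map a 0-12 grade to a star level (0 = no research or disallowed).

    Integer grades follow the published bands. For fractional scores we
    ASSUME cut-offs midway between bands (0.5, 3.5, 6.5, 9.5).
    """
    if not 0 <= score <= 12:
        raise ValueError("score must be in the range 0-12")
    if score < 0.5:
        return 0
    if score < 3.5:
        return 1
    if score < 6.5:
        return 2
    if score < 9.5:
        return 3
    return 4


print([star_rating(s) for s in range(13)])
# [0, 1, 1, 1, 2, 2, 2, 3, 3, 3, 4, 4, 4]
```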

Timetable

All Panel B sub-panels were asked to complete 25% of their outputs by an initial deadline in order to allow cross-panel calibration, with a later deadline for 50% completion. To make these a relatively systematic sample, each SP11 panellist first graded the first output of every staff member (ensuring over 25%, since some staff submitted fewer than four outputs), then in a second pass the fourth output of every staff member, and finally outputs 2 and 3 to make up the remaining 50%.
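The pass order can be sketched as follows, showing what fraction of the outputs is complete after each pass. The function names and the example numbers of outputs per staff member are hypothetical.

```python
# Output number within a staff member's submission -> pass in which it is graded.
PASS_OF_OUTPUT = {1: 1, 4: 2, 2: 3, 3: 3}


def scoring_passes(outputs_per_staff):
    """Group (staff, output number) pairs into the three grading passes.

    outputs_per_staff: dict of staff member -> number of outputs (1 to 4).
    """
    passes = {1: [], 2: [], 3: []}
    for staff, count in sorted(outputs_per_staff.items()):
        for n in range(1, count + 1):
            passes[PASS_OF_OUTPUT[n]].append((staff, n))
    return passes


def cumulative_fractions(outputs_per_staff):
    """Fraction of all outputs completed at the end of each pass."""
    passes = scoring_passes(outputs_per_staff)
    total = sum(len(items) for items in passes.values())
    done, fractions = 0, []
    for k in (1, 2, 3):
        done += len(passes[k])
        fractions.append(done / total)
    return fractions


# Hypothetical: two staff with four outputs, one with three, one with one.
print(cumulative_fractions({"s1": 4, "s2": 4, "s3": 3, "s4": 1}))
```

When every staff member submits four outputs, the first two passes reach exactly 25% and 50%. When some submit fewer, the first pass alone exceeds 25% (one third in this example).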

Scoring Resolution

As noted, each output was read and scored independently by three panellists. Where there was substantial disagreement, panellists were encouraged to discuss the output and the reasons for their scores. This sometimes highlighted an aspect that one or more panellists had missed, and sometimes revealed more fundamental differences in individual notions of quality.
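Picking out outputs for discussion amounts to checking the spread of the three scores. In this sketch the function name and the 3-point threshold are hypothetical; no specific cut-off for "substantial disagreement" is stated.

```python
def outputs_to_discuss(scores, threshold=3):
    """Return ids of outputs whose panellist scores differ by at least
    `threshold` points on the 0-12 scale.

    scores: dict of output id -> {panellist: score}.
    threshold is a HYPOTHETICAL cut-off.
    """
    return sorted(
        out_id for out_id, marks in scores.items()
        if max(marks.values()) - min(marks.values()) >= threshold
    )


scores = {
    "o1": {"A": 7, "B": 8, "C": 6},
    "o2": {"A": 3, "B": 9, "C": 6},
}
print(outputs_to_discuss(scores))  # ['o2']
```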

As the scores were to be normalised, panellists were encouraged not to use these discussions to make small point changes to their scores, as it was important to retain each panellist’s own rank ordering of outputs.

Importantly, as normalisation was carried out after this resolution process, we did not know the actual final grade of any output during the process, nor, for that matter, did we ever learn the final post-normalisation grades of any outputs whatsoever.

Normalisation

Some panellists were clearly more generous, or more severe, than others. Some tended to mark towards the centre, while others used a wider range of scores. For most panels these differences were managed through discussion, debate and negotiation. In contrast, the computing sub-panel used an algorithm.

In broad terms, the algorithm compared each panellist’s scores with the average scores of the other panellists, and then used this to create a table converting that panellist’s individual scores into normalised scores. For example, if a panellist was particularly generous, their assigned score of 7 might end up being translated to a normalised score of 5.8.
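One simple way to build such a conversion table is sketched below. It is an assumption, not the SP11 algorithm itself: each raw score a panellist used is mapped to the average score the *other* panellists gave to the outputs that panellist scored that way. The function name and data are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def normalisation_table(scores, panellist):
    """Build a raw -> normalised score table for one panellist.

    scores: dict of output id -> {panellist: raw 0-12 score}.
    ASSUMPTION: the normalised value of a raw score is the mean of the other
    panellists' average scores on the outputs this panellist gave that score.
    """
    others_by_raw = defaultdict(list)
    for marks in scores.values():
        if panellist not in marks:
            continue
        others = [s for p, s in marks.items() if p != panellist]
        if others:
            others_by_raw[marks[panellist]].append(mean(others))
    return {raw: mean(vals) for raw, vals in sorted(others_by_raw.items())}


# Hypothetical scores in which panellist G is consistently generous.
scores = {
    "o1": {"G": 7, "X": 6, "Y": 5},
    "o2": {"G": 7, "X": 6, "Y": 6},
    "o3": {"G": 10, "X": 8, "Y": 9},
}
print(normalisation_table(scores, "G"))  # {7: 5.75, 10: 8.5}
```

A practical version would also need to fill in raw scores the panellist rarely or never used, and keep the table monotonic so that the panellist's own rank ordering is preserved.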

In addition, the degree to which a panellist’s normalised scores were consistently close to the average was used to create a measure of how ‘accurate’ each panellist was, and this was used as a weighting when the scores were eventually averaged. Effectively, panellists who scored more closely to the average were regarded as more reliable and hence given more weight.

The weighted average of the normalised scores was then computed and used to determine the final star rating of each output.
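The accuracy weighting and final averaging can be sketched as below. The exact accuracy measure used by SP11 is not given here, so this assumes inverse mean-squared-deviation weighting: each panellist's weight is one over the average squared gap between their normalised score and the mean of their co-assessors' scores. Function names and data are hypothetical.

```python
from statistics import mean


def panellist_weights(normalised, floor=1e-6):
    """Weight each panellist by how closely they track their co-assessors.

    normalised: dict of output id -> {panellist: normalised score}.
    ASSUMPTION: weight = 1 / mean squared gap to the other panellists' mean,
    floored to avoid division by zero.
    """
    gaps = {}
    for marks in normalised.values():
        for p, s in marks.items():
            others = [v for q, v in marks.items() if q != p]
            if others:
                gaps.setdefault(p, []).append((s - mean(others)) ** 2)
    return {p: 1.0 / max(mean(sq), floor) for p, sq in gaps.items()}


def final_scores(normalised, weights):
    """Weighted average of the normalised scores for each output."""
    return {
        out_id: sum(weights[p] * s for p, s in marks.items())
        / sum(weights[p] for p in marks)
        for out_id, marks in normalised.items()
    }


normalised = {
    "o1": {"A": 6.0, "B": 6.0, "C": 9.0},
    "o2": {"A": 8.0, "B": 8.0, "C": 5.0},
}
w = panellist_weights(normalised)
print(w)
print(final_scores(normalised, w))
```

Here C, the lone dissenter on both outputs, ends up with a quarter of A's weight, so o1 comes out at about 6.33 rather than the plain mean of 7, and o2 at about 7.67.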

Note that for this algorithm to work there needs to be a high level of overlap and mixing between different panellists’ output allocations. If panellists had kept too close to their areas of expertise, there would not have been sufficient overlap, and the algorithm would not have been able to normalise between areas. This need was what drove the requirement for panellists to ‘spread’ their expertise. In other words, we favoured algorithmic optimisation over human expertise.
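The overlap requirement can be made concrete as a graph property. Link two panellists if they scored at least one output in common. At a minimum, panellists can only be put on a common scale if they lie in the same connected component of this co-assessment graph. The sketch below is an illustration of that idea, not a check SP11 is known to have run; names and data are hypothetical.

```python
from collections import defaultdict


def overlap_components(allocation):
    """Connected components of the co-assessment graph.

    allocation: dict of output id -> list of panellists who scored it.
    """
    neighbours = defaultdict(set)
    for panel in allocation.values():
        for p in panel:
            neighbours[p].update(q for q in panel if q != p)
    seen, components = set(), []
    for start in sorted(neighbours):
        if start in seen:
            continue
        # Depth-first search from an unvisited panellist.
        stack, component = [start], set()
        while stack:
            p = stack.pop()
            if p in component:
                continue
            component.add(p)
            stack.extend(neighbours[p] - component)
        seen |= component
        components.append(sorted(component))
    return components


# Two panels that never overlap cannot be normalised against each other...
split = {"o1": ["A", "B", "C"], "o2": ["D", "E", "F"]}
print(overlap_components(split))
# ...but a single shared output links them into one component.
print(overlap_components({**split, "o3": ["C", "D", "G"]}))
```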

Normalisation Algorithm Assumptions

Given the importance of this stage, it is worth listing the assumptions behind the algorithm used.

- There is some single ‘real’ quality grade for each output.

- Each panellist creates estimates of this real quality value.

- Panellists have different profiles (more/less generous, etc.; as described above).

- Panellists vary in their accuracy of judgement.

- Each panellist is deemed equally good as an estimator of all outputs.

Note that the last of these assumptions effectively means that expertise is ignored.
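The five assumptions above can be made precise as a measurement model. This is my formalisation, not a specification of the SP11 algorithm. Write $q_i$ for the real quality of output $i$, $P_i$ for the panellists who scored it, and $s_{pi}$ for panellist $p$'s raw score:

$$
g_p^{-1}(s_{pi}) = q_i + \varepsilon_{pi}, \qquad \varepsilon_{pi} \sim \mathcal{N}(0, \sigma_p^2)
$$

Here the monotone function $g_p$ captures panellist $p$'s profile (generosity, spread), and $\sigma_p$ captures their accuracy. Because $\sigma_p$ carries no index $i$, $p$ is treated as equally good on every output. Under this model, with $g_p$ and $\sigma_p$ known, the maximum-likelihood estimate of $q_i$ is the inverse-variance weighted average of the normalised scores:

$$
\hat{q}_i = \frac{\sum_{p \in P_i} w_p \, g_p^{-1}(s_{pi})}{\sum_{p \in P_i} w_p}, \qquad w_p = \frac{1}{\sigma_p^2}
$$

An expertise-aware model would instead let the noise depend on the topic $t(i)$ of the output, writing $\sigma_{p,t(i)}$ in place of $\sigma_p$.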

Textual Feedback

Textual feedback was provided to institutions on each UoA. Typically only university senior management and heads of school or department saw this.

Previous RAE feedback had been criticised for being too anodyne, and the guidance was to be more specific, highlighting particular areas of strength.

As the ACM topic codes had been collected and were uniform across institutions, they were used to structure the output feedback. Roughly, the feedback reflected areas that had particularly high levels of 4*/3* outputs relative to the overall UoA profile. Typically, only stronger areas, not weaker ones, were mentioned.

Small areas were explicitly not mentioned, irrespective of their strength, as this would have risked revealing individual staff members’ scores.
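The selection rule can be sketched as follows. It assumes a topic is mentioned when its share of 3*/4* outputs exceeds the UoA's overall share, and only if it has at least `min_size` outputs. The function name and the value of `min_size` are hypothetical, since the actual size limit is not stated.

```python
from collections import defaultdict

STRONG = {3, 4}  # star levels counted as strong


def areas_to_mention(outputs, min_size=5):
    """Topics whose share of 3*/4* outputs beats the UoA's overall share.

    outputs: list of (acm_topic, stars) pairs for one UoA.
    min_size is a HYPOTHETICAL lower limit on area size.
    """
    by_topic = defaultdict(list)
    for topic, stars in outputs:
        by_topic[topic].append(stars)
    overall = sum(s in STRONG for _, s in outputs) / len(outputs)
    chosen = []
    for topic, stars in sorted(by_topic.items()):
        if len(stars) < min_size:
            continue  # small areas are never mentioned, to protect individuals
        share = sum(s in STRONG for s in stars) / len(stars)
        if share > overall:
            chosen.append(topic)
    return chosen


uoa = ([("HCI", 4)] * 4 + [("HCI", 2)]
       + [("AI", 3)] + [("AI", 2)] * 4
       + [("Theory", 4)] * 2)
print(areas_to_mention(uoa))  # ['HCI']
```

In this example "Theory" is the strongest area, but it is omitted because it is small. This is exactly the case where absence from the feedback does not imply weakness.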

In addition, those writing the feedback may have graded only a subset of the UoA’s outputs; this may have influenced which topics they emphasised.

That is, while areas mentioned as particularly strong could be assumed to be strong, absence from the feedback should not have been taken to mean weakness.

It is clear from subsequent conversations that this feedback was not widely understood.

- Heads of school/department did not appear to understand the mapping between the feedback topics and their research groups.

- Nor did they seem to understand the lower size limit, so a small research group that was not mentioned was assumed to have been weak.

- This was probably even more problematic for faculty deans and university PVCs, given that this approach was specific to SP11.