Note: all answers refer to SP11 unless otherwise stated.

Q. Were outputs anonymised?

No. Some level of weak anonymisation would have been possible (for example, removing names from title pages), but it is hard to imagine full anonymisation of the kind used in some double-blind review mechanisms. Certainly in SP11 there was no such anonymisation.

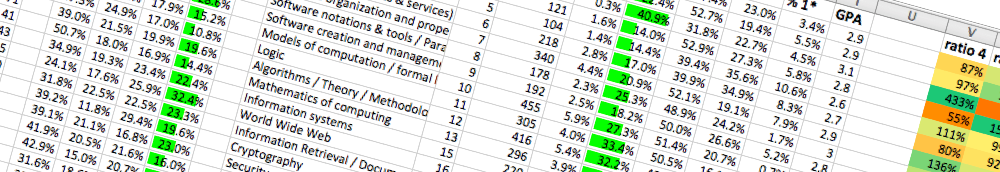

Furthermore, the scoring spreadsheets included both the staff member for whom the output was registered and the institution.

Q. Was it better to submit journal articles than conference papers?

The simple answer is no: all outputs were assessed on the content of the submitted outputs.

However, Morris Sloman's analysis of SP11 results shows that journal outputs were more likely to be scored 4* than conference outputs. There are several reasons for this:

- Journal papers are often longer, giving more space to demonstrate rigour, which for many panellists was the major criterion.

- Journal papers have often been through a more sustained review process, with multiple revisions by the authors, which is likely to increase the ultimate quality of the content.

Q. Are papers submitted to the top venue in a field guaranteed to get 4*?

No. The assessment was based primarily on the quality of the content of the outputs as delivered to REF. Indeed, the SP11 panel report notes that some apparently high-ranking venues had surprisingly poor papers.

That said, a venue with a known rigorous review process would increase confidence in the correctness of methods and proofs. Likewise, appearance in a highly rated venue could be evidence of the significance of the work within its area. In the end, panellists were asked to use their professional judgement, as they would in assessing the reliability and quality of any publication they read in their normal research activity.