Simple techniques can help, but even mathematicians can get it wrong.

It would be nice if there were a magic bullet that made all of probability and statistics easy. Hopefully some of the videos I am producing will help statistics make more sense, but there will always be difficult cases: our brains are just not built for complex probabilities.

However, it may help to know that even experts can get it wrong!

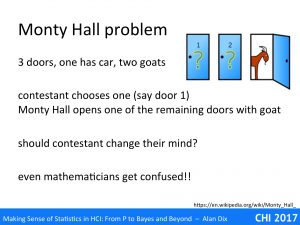

In this video we’ll look at two complex issues in probability that even mathematicians sometimes find hard: the Monty Hall problem and DNA evidence. We’ll also see how a simple technique can help you tune your common sense for this kind of problem. This is NOT the magic bullet, but it may sometimes help.

There was a quiz show in the 1950s where the star prize was a car. After battling their way through previous rounds, the winning contestant had one final challenge. There were three doors: behind one of them was the prize car, but behind each of the other two was a goat.

The contestant chose a door but, to increase the drama of the moment, the quizmaster did not immediately open the chosen door and instead opened one of the others. The quizmaster, who knew which was the winning door, would always open a door with a goat behind it. The contestant was then given the chance to change their mind.

- Should they stick with the original choice?

- Should they swop to the remaining unopened door?

- Or doesn’t it make any difference?

What do you think?

One argument is that the chance of the original door hiding the car was one in three, whereas the chance of it being behind the other door is one in two, so you should change. However, the astute may have noticed that this is a slightly flawed probabilistic argument, as the probabilities don’t add up to one.

A counter argument is that at the end there are two doors, so the chances are even as to which has the car, so there is no advantage to swopping.

An information theoretic argument is similar: the doors equally hide the car before and after the other door has been opened, and you have no more knowledge, so why change your mind?

Even mathematicians and statisticians can argue about this and, when they work it out by enumerating the cases, do not always believe the answer. It is one of those cases where one’s common sense simply does not help … even for a mathematician!

Before revealing the correct answer, let’s have a thought experiment.

Imagine if instead of three doors there were a million doors. Behind 999,999 doors are goats, but behind the one lucky door there is a car.

- I am the quizmaster and ask you to choose a door. Let’s say you choose door number 42.

- I now open 999,998 of the remaining doors, being careful to only open doors hiding goats.

- You are left with two doors, your original choice and the one door I have not opened.

Do you want to change your mind?

This time it is pretty obvious that you should. There was virtually no chance of you having chosen the right door to start with, so it was almost certainly (999,999 out of a million) one of the others – I have helpfully discarded all the rest so the remaining door I didn’t open is almost certainly it.

It is a bit as if, before I opened the 999,998 goat doors, I’d asked you, “Do you want to change your mind? Do you think the car is behind precisely door 42, or behind any one of the others?”

In fact exactly the same reasoning holds for three doors. In this case there was a 2/3 chance that the car was behind one of the doors you did not choose and, as the quizmaster, I discarded one of these that had a goat, so it is twice as likely that the car is behind the door I did not open as behind your original choice. Regarding the information theoretic argument: the quizmaster opening the goat doors does add information. Originally they were possible car doors; afterwards you know they are not.

Mind you, it still feels a bit like smoke and mirrors with three doors, even though the million-door case is obvious.
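If the argument still feels slippery, you can check it by brute force. The following is a minimal Python sketch, not part of the original video: the function names (`play`, `win_rate`, `exact_switch_probability`), the number of trials and the random seed are all illustrative choices. It assumes, as described above, that the quizmaster knows where the car is and opens every other door except one, never revealing the car.

```python
import random
from fractions import Fraction


def play(n_doors, switch, rng):
    """Play one game; return True if the contestant ends up with the car."""
    car = rng.randrange(n_doors)
    choice = rng.randrange(n_doors)

    # The quizmaster opens all but one of the other doors, never the car.
    if choice == car:
        # Every other door hides a goat, so any one of them may stay closed.
        remaining = (choice + rng.randrange(1, n_doors)) % n_doors
    else:
        # The door hiding the car has to be the one left closed.
        remaining = car

    final = remaining if switch else choice
    return final == car


def win_rate(n_doors, switch, trials=100_000, seed=0):
    """Estimate the chance of winning by simulating many games."""
    rng = random.Random(seed)
    wins = sum(play(n_doors, switch, rng) for _ in range(trials))
    return wins / trials


def exact_switch_probability(n_doors):
    """Switching wins exactly when the first pick was wrong."""
    return 1 - Fraction(1, n_doors)


if __name__ == "__main__":
    for n in (3, 1_000_000):
        print(n, "doors, stick: ", win_rate(n, switch=False))
        print(n, "doors, switch:", win_rate(n, switch=True))
        print(n, "doors, exact chance when switching:", exact_switch_probability(n))
```

With three doors the simulated win rate for switching should settle close to 2/3 and for sticking close to 1/3; with a million doors switching wins almost every time, which is the extreme case doing its job.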

Using the extreme case helps tune your common sense, often allowing you to see flaws in mistaken arguments, or work out the true explanation.

It is not an infallible heuristic, as sometimes arguments do change with scale, but it is often helpful.

The Monty Hall problem has always been a bit of fun, albeit disappointing if you were the contestant who got it wrong. However, there are similar kinds of problem where the outcomes are deadly serious.

DNA evidence is just such an example.

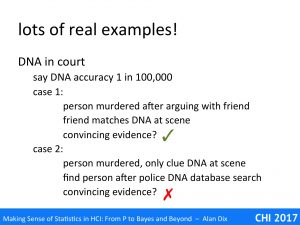

Let’s imagine that DNA matching has an accuracy of one in 100,000.

There has been a murder, and remains of DNA have been found at the scene.

Imagine now two scenarios:

Case 1: Shortly before the body was found, the victim was known to have had a violent argument with a friend. The police match the DNA of the friend with that found at the murder scene. The friend is arrested and taken to court.

Case 2: The police look up the DNA in the national DNA database and find a positive match. The matched person is arrested and taken to court.

Similar cases have occurred and led to convictions based heavily on the DNA evidence. However, while in case 1 the DNA is strong corroborating evidence, in case 2 it is not. Yet courts, guided by ‘expert’ witnesses, have not understood the distinction and convicted people in situations like case 2. Belatedly the problem has been recognised and in the UK there have been a number of appeals where longstanding cases have been overturned, sadly not before people have spent considerable periods behind bars for crimes they did not commit. One can only hope that similar evidence has not been crucial in countries with a death penalty.

If you were the judge or jury in such a case would the difference be obvious to you?

If not, we can use a similar trick to the one for the Monty Hall problem, but here let’s make the numbers less extreme. Instead of a 1 in 100,000 chance of a false DNA match, let’s make it 1 in 100. While this is still useful, albeit not overwhelming, corroborative evidence in case 1, it is pretty obvious that if there are more than a few hundred people in the police database, then you are bound to find a match.

It is a bit like if a red Fiat Uno had been spotted outside the victim’s house. If the arguing friend’s car had been a red Fiat Uno it would have been good additional circumstantial evidence, but simply arresting any red Fiat Uno owner would clearly be silly.

If we return to the original 1 in 100,000 figure for a DNA match, it is the same. If there are more than a few hundred thousand people in the database then you are almost bound to find a match. This might be a way to find people you might investigate looking for other evidence, and indeed this is the way several cold cases have been solved over recent years, but the DNA evidence would not in itself be strong.
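To put rough numbers on “almost bound to find a match”, here is a small Python sketch. It is an illustration under simplifying assumptions rather than a forensic calculation: it assumes everyone in the database is innocent and that each person has the same, independent chance of a false match. The function names and the database sizes (300 and 300,000) are made up for the example.

```python
def expected_false_matches(false_match_rate, database_size):
    """Average number of innocent people in the database who would match."""
    return false_match_rate * database_size


def prob_at_least_one_false_match(false_match_rate, database_size):
    """Chance that a trawl of the database turns up at least one false match.

    Assumes each person matches independently with the same probability.
    """
    return 1 - (1 - false_match_rate) ** database_size


if __name__ == "__main__":
    scenarios = [
        ("1 in 100, single suspect (case 1)", 1 / 100, 1),
        ("1 in 100, database of 300", 1 / 100, 300),
        ("1 in 100,000, single suspect (case 1)", 1 / 100_000, 1),
        ("1 in 100,000, database of 300,000", 1 / 100_000, 300_000),
    ]
    for label, rate, size in scenarios:
        print(label)
        print("  expected false matches:", expected_false_matches(rate, size))
        print("  chance of at least one:", prob_at_least_one_false_match(rate, size))
```

Under these assumptions both database scenarios give about three expected false matches and roughly a 95% chance of at least one, whereas checking a single, independently suspected person leaves the chance of a coincidental match at the original 1 in 100 or 1 in 100,000.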

In summary, some diverting puzzles and also some very serious problems involving probability can be very hard to understand. Our common sense is not well tuned to probability. Even trained mathematicians can get it wrong, which is one of the reasons we turn to formulae and calculations. Changing the scale of numbers in a problem can sometimes help your common sense understand them.

In the end, if it is confusing, it is not your fault: probability is hard for the human mind. So, if in doubt, seek professional help.