This is the point where I nail my colours to the mast – should you use traditional statistics or Bayesian methods? With all the controversy in the media about the ‘statistical crisis’ should one opt for alt-stats or stay with conservative ones? Of course the answer will partly be ‘it depends’, but for most purposes I think there is a best answer …

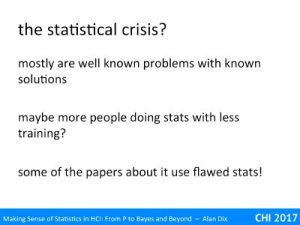

You may have seen stories about the ‘statistical crisis’ [Ba16]: a variety of papers and articles in the technical and sometimes even popular press have highlighted generally poor statistical practice. This has touched many disciplines, including HCI [Ca07, KN16].

Some have focused on the ‘replication crisis’: the fact that many attempts to repeat scientific studies have failed to reproduce the original (often statistically significant) outcomes. Others have focused on the statistics itself, especially p-hacking, where wittingly or unwittingly scientists use various means to ensure they get the necessary p<5% to enable them to publish their results.

Some of these problems are intrinsic to the scientific publishing process.

One is the tendency for journals to only accept positive results, so that non-significant results do not get published. This sounds reasonable until you remember that a threshold of p<5% means that, when there is no real effect, on average one time in twenty you will still reject the null hypothesis (appear to have a positive finding) by sheer chance. So if 100 scientists do experiments where there is no real effect, typically this will lead to 5 apparently ‘publishable’ effects.
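The arithmetic here is easy to check by simulation. The following is a minimal sketch, not a definitive analysis: it assumes each of 100 hypothetical experiments compares two groups of 30 values drawn from the same normal distribution (so there is no real effect), and it uses a simple two-sided z-test with known variance. The function names, group size and seed are illustrative choices.

```python
import random
from statistics import NormalDist, mean


def null_experiment_p_value(rng, n=30):
    """Two-sided z-test p-value for two groups drawn from the same N(0, 1)."""
    a = [rng.gauss(0, 1) for _ in range(n)]
    b = [rng.gauss(0, 1) for _ in range(n)]
    # Both groups have known unit variance, so the difference in
    # means has standard deviation sqrt(2 / n).
    z = (mean(a) - mean(b)) / (2 / n) ** 0.5
    return 2 * (1 - NormalDist().cdf(abs(z)))


def count_false_positives(n_experiments=100, alpha=0.05, seed=1):
    """Count how many no-effect experiments still come out 'significant'."""
    rng = random.Random(seed)
    return sum(
        null_experiment_p_value(rng) < alpha for _ in range(n_experiments)
    )


false_positives = count_false_positives()
print(f"{false_positives} of 100 null experiments reached p < 0.05")
```

The exact count varies with the seed, but over many runs it averages about 5 in 100. If only those ‘significant’ runs were published, every one of them would be a false positive.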

Another problem is that the ‘publish or perish’ culture of academia means that researchers may ‘bend’ the facts slightly to get publishable results. To be fair, this may be because they are convinced for other reasons that something is true, so they ‘gild the lily’ a little, ignoring negative indications and emphasising positive ones. As we saw previously, famous scientists have done this in the past, and because what they claimed happened to be true, history has overlooked the poor stats (or looked the other way).

Most of the publicity on this has focused on traditional hypothesis testing. However, the potential problems in traditional statistics have been well known for at least 40 years and are to do with poor use or poor interpretation, not intrinsic weaknesses in the statistical techniques themselves.

There have been some changes, which may have led to the current level of publicity. One is the increasing publication pressures mentioned above. Another is that in years gone by when scientists needed to do statistics they would typically ask a statistician for advice, especially if it was at all unusual or unlike previous studies. Indeed, my own first job was at an agricultural engineering research institute, where, in addition to my main role doing mathematical and computational modelling, I was part of the institute’s statistics advisory team. Now time and budgetary constraints mean that research institutions are less likely to offer easy access to statistical advice and instead researchers reach for easy to use, but potentially easy to misuse, statistical packages.

However, there is also not a little hype amongst the genuine concern, including some fairly shaky statistical methods in some of the papers criticising statistical methods!

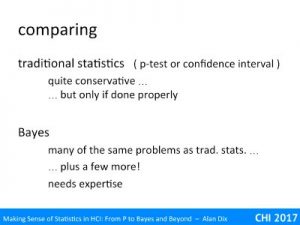

Most of the ‘bad press’ has focused on traditional statistics and in particular hypothesis testing – the dreaded p! As noted, this is largely due to well-understood issues that arise when these methods are used inexpertly or badly. When used properly, traditional statistics (both hypothesis testing and confidence intervals) tend to be relatively conservative.

One reaction to this has been for some to abandon statistics entirely; famously (or maybe infamously) the journal Basic and Applied Social Psychology has banned all hypothesis testing [Wo15]. However, this is a bit like getting worried about the safety of a cruise ship and so jumping into the water to avoid drowning. The answer to poor statistics is better statistics, not no statistics!

The other reaction has been to look to alternative statistics or ‘new statistics’; this has included traditional confidence intervals and also Bayesian statistics. Some of this is quite valid; the good use of statistics includes using the correct type of analysis for the kinds of data and information you have available. However, the advocacy of these alternatives can sometimes include an element of snake oil (paper titles such as “Using Bayes to get the most out of non-significant results” probably don’t help [Di14]).

Crucially, most of the problems that have been identified in the ‘statistical crisis’ also apply to alternative methods: selective publication, p-hacking (or various other forms of cherry picking), and post-hoc hypotheses. In addition, reduced familiarity can lead to poorer statistical execution and reporting. Bayesian statistics in particular currently requires considerable expertise to be used correctly. Indeed, at the time of writing the Wikipedia page for Bayes factor (a Bayesian alternative to hypothesis testing) includes, as its central example, precisely this kind of inexpert use of the methods [W17].

There are good reasons why, even after 40 plus years of debate, most professional statisticians still use traditional methods!

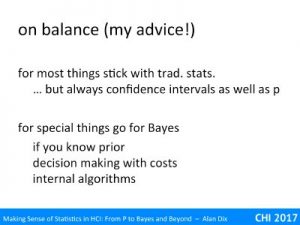

Based on all this my personal advice is that for most things stick with traditional statistics, but where possible always quote confidence intervals alongside any form of p-value (APA also recommend this [AP10]).
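As an illustration of reporting both together, here is a minimal sketch using only Python's standard library. It assumes a large-sample normal approximation (for small samples a t-distribution would be more appropriate), and the data are simulated rather than real: 50 hypothetical paired differences in completion time. The function name `mean_ci_and_p` is an illustrative choice.

```python
import random
from statistics import NormalDist, mean, stdev


def mean_ci_and_p(differences, confidence=0.95):
    """Return (mean, (low, high), p) using a large-sample normal approximation."""
    n = len(differences)
    m = mean(differences)
    se = stdev(differences) / n ** 0.5  # standard error of the mean
    z_crit = NormalDist().inv_cdf(0.5 + confidence / 2)
    # Two-sided p-value for the null hypothesis that the true mean is zero.
    p = 2 * (1 - NormalDist().cdf(abs(m / se)))
    return m, (m - z_crit * se, m + z_crit * se), p


# Simulated (not real) paired differences in completion time, in seconds.
rng = random.Random(42)
differences = [rng.gauss(1.5, 4.0) for _ in range(50)]

m, (low, high), p = mean_ci_and_p(differences)
print(f"mean difference = {m:.2f}s, 95% CI [{low:.2f}, {high:.2f}], p = {p:.3f}")
```

The interval tells the reader how large or small the effect might plausibly be, which a p-value on its own does not.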

This is partly because, despite the potential misuse, there is still better general understanding of these methods and their pitfalls. You are more likely to do them right and your readers are more likely to have an idea of what they mean.

This said there are a number of circumstances when Bayesian statistics are not only a good idea, but the only sensible thing to do. These are usually circumstances where you know the prior and are involved in some sort of decision-making. For example, if a patient is in hospital you know the underlying prevalence of various diseases and so should use this as part of diagnostic reasoning. This also applies in the algorithmic use of Bayesian methods in intelligent or adaptive interfaces.
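The diagnostic case can be sketched with Bayes' theorem directly. This is a minimal illustration with made-up numbers: the prevalence (1%), sensitivity (90%) and specificity (95%) are assumptions chosen for the example, not figures for any real test or disease, and the function name is hypothetical.

```python
def posterior_given_positive(prevalence, sensitivity, specificity):
    """P(disease | positive test), computed with Bayes' theorem."""
    true_positive = sensitivity * prevalence
    false_positive = (1 - specificity) * (1 - prevalence)
    return true_positive / (true_positive + false_positive)


# Hypothetical values: the prevalence plays the role of the known prior.
posterior = posterior_given_positive(
    prevalence=0.01, sensitivity=0.90, specificity=0.95
)
print(f"P(disease | positive test) = {posterior:.3f}")
```

With these assumed numbers the posterior is only about 15%, even though the test looks accurate, because the prior prevalence is so low. Ignoring a known prior here would give a badly misleading answer.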

If you do choose to use Bayesian statistics, do ensure you consult an expert, especially if you are dealing with continuous values (such as completion times), as the theory around these is particularly complex (as is evident on the Wikipedia page!). Do be careful that your prior does not simply mean you are confirming your own bias. Also do be aware that the odds ratios that are taken as acceptable evidence seem (to a traditional statistician!) to be somewhat lax (and 5% sig. is already quite lax!), so I would advise using one of the stricter levels.

Whether you use traditional stats, p-values, confidence intervals, Bayesian statistics, or tea-leaf reading – make sure you use the statistics properly. Understand what you are doing and what the results you are presenting mean.

… and I hope this course helps!

Endnote

For a balanced view of Bayesian methods see the interview with Peter Diggle, President of the Royal Statistical Society [RS15]. However, it is perhaps telling that the Royal Statistical Society’s own mini-guide for non-statisticians, also called ‘Making Sense of Statistics’, avoids mentioning Bayesian methods entirely [SS10].

References

[AP10] APA (2010). Publication Manual of the American Psychological Association, Sixth Edition. http://www.apastyle.org/manual/

[Ba16] Baker, M. (2016). Statisticians issue warning over misuse of P values. Nature, 531, 151 (10 March 2016) doi:10.1038/nature.2016.19503

[Ca07] Paul Cairns. 2007. HCI… not as it should be: inferential statistics in HCI research. In Proceedings of the 21st British HCI Group Annual Conference on People and Computers: HCI…but not as we know it – Volume 1 (BCS-HCI ’07), Vol. 1. British Computer Society, Swinton, UK, 195-201.

[Di14] Dienes, Z. (2014). Using Bayes to get the most out of non-significant results. Frontiers in Psychology, 5, 781. http://doi.org/10.3389/fpsyg.2014.00781

[KN16] Kay, M., Nelson, G., and Hekler, E. 2016. Researcher-Centered Design of Statistics: Why Bayesian Statistics Better Fit the Culture and Incentives of HCI. CHI 2016, ACM, pp. 4521-4532.

[RS15] RSS (2015). Statistician or statistical scientist? an interview with RSS president Peter Diggle. StatsLife, Royal Statistical Society. 08 January 2015. https://www.statslife.org.uk/features/2822-statistician-or-statistical-scientist-an-interview-with-rss-president-peter-diggle

[SS10] Sense about Science (2010). Making Sense of Statistics. Sense about Science, in collaboration with the Royal Statistical Society. 29 April 2010. http://senseaboutscience.org/activities/making-sense-of-statistics/

[W17] Wikipedia (2017). Bayes factor. Wikipedia. Internet Archive at 26th July 2017. https://web.archive.org/web/20170722072451/https://en.wikipedia.org/wiki/Bayes_factor

[Wo15] Chris Woolston (2015). Psychology journal bans P values. Nature News, Research Highlights: Social Selection. 26 February 2015. http://www.nature.com/news/psychology-journal-bans-p-values-1.17001