from the real world to measurement and back again

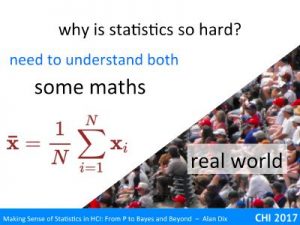

If you want to use statistics, you need to learn how to do statistics: working out which tests to use, and perhaps learning a stats package such as SPSS or R.

But why do this at all? What does statistics actually do?

Fundamentally statistics is about trying to learn dependable things about the real world based on measurements of it.

However, what we mean by ‘real’ is itself a little complicated, from the actual users you have tested to the hypothetical idea of a ‘typical user’ of your system.

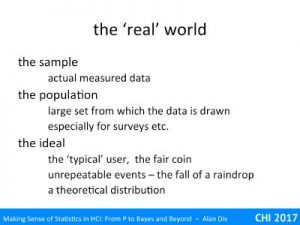

We’ll start with the real world, but what is it?

the sample – First of all there is the actual data you have: results from an experiment, responses from a survey, log data from a deployed application. This is the real world. The user you tested at 3pm on a rainy day in March, after a slightly overfilling lunch, did make precisely three errors and finished the task in 17 minutes and 23 seconds. However, while this measured data is real, it is typically not what you really wanted to know. Would the same user on a different day, under different conditions, have made the same errors? What about other users?

the population – Another idea of real, and one that may be what you really want to know, is when there is a larger group of people you want to know about, say all the people in your company, or all users of product A. What would be the average error rate (and the variation in it) if all of them sat down and used the software you are testing? Or, as a more concrete kind of measurement, what is their average height?

The sample of people whose heights you actually measure is real data, but you are using it to find out about the population as a whole.

the ideal – However, while this idea of the actual population is very concrete, often the real world you are actually interested in is slightly more nebulous. Even for the current users of product A, you are not interested in the error rate if they tried your new software today, but in the rate if they tried it multiple times over a period (maybe with a memory-wiping pill administered occasionally) – that is, a sort of ‘typical’ error rate when each of them uses the software.

Furthermore, it is often not so much the actual set of users that you want to know about (not that you don’t care about them), but the typical user, especially for a new piece of software where you have no ‘real’ users yet.

Similarly, when you toss a coin you have an idea of the behaviour of a fair coin that is not simply the complete collection of every coin in circulation. Even when you have tossed the coin, you can still think about the different ways it could have fallen, somehow reasoning about all possible pasts and presents for an unrepeatable event.

Finally, this hypothetical ‘real’ event may be represented mathematically as a theoretical distribution, such as the Normal distribution (for heights) or the Binomial distribution (for coin tosses).
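To make the link between an idealised fair coin and a theoretical distribution a little more concrete, here is a minimal Python sketch. It is only an illustration: the function names, the choice of 10 tosses, the 10,000 repeats and the random seed are all assumptions made for this example. It compares the proportion of simulated runs giving each number of heads with the probability the Binomial distribution predicts.

```python
import math
import random


def binomial_pmf(k, n, p=0.5):
    """Theoretical probability of exactly k heads in n tosses, where P(heads) = p."""
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)


def simulate_heads(n, rng, p=0.5):
    """Toss a simulated coin n times and count the heads."""
    return sum(1 for _ in range(n) if rng.random() < p)


# Illustrative settings (assumptions, not taken from any real study).
rng = random.Random(42)   # fixed seed so that the run is repeatable
n_tosses = 10
n_repeats = 10_000

# counts[k] = number of simulated runs that produced exactly k heads
counts = [0] * (n_tosses + 1)
for _ in range(n_repeats):
    counts[simulate_heads(n_tosses, rng)] += 1

for k in range(n_tosses + 1):
    simulated = counts[k] / n_repeats
    theoretical = binomial_pmf(k, n_tosses)
    print(f"{k:2d} heads: simulated {simulated:.3f}, theoretical {theoretical:.3f}")
```

The simulated proportions are 'measurements' of the imaginary coin, while the Binomial probabilities describe the ideal; with enough repeats the two should come close, but they will rarely match exactly.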

In practice you rarely need to voice these things explicitly, but occasionally you do need to think carefully about them. If you have done a series of consistent blood tests you may know something very important about a particular individual, but not about patients in general. If you are analysing big data you may know something very precise about your current users, and how they behave given a particular social context and the particular algorithms in your system, but not necessarily about potential users and how they may behave if your algorithms and environment change.

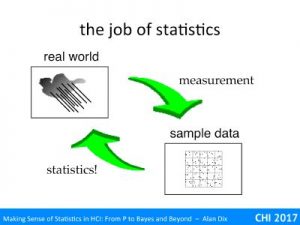

Once you know what the ‘real’ world you want to know about is, the job of statistics becomes clear.

You have taken some measurements, often of some sample of people and situations, and you want to use those measurements to understand the real world.

Given a sample of 20 heights of random people from your organisation, what can you infer about the heights of everyone? Given the error rates of 20 people on an artificial task in a lab, what can you tell about the behaviour of a typical user in their everyday situation?
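As a rough sketch of the first question, the Python below draws 20 heights from an invented population and uses them to estimate the population mean with a 95% confidence interval. Everything about the population is an assumption made up for illustration (5,000 people, Normally distributed heights with mean 170 cm and standard deviation 10 cm), and the interval uses the standard t-based formula with the tabulated critical value for 19 degrees of freedom.

```python
import math
import random
import statistics

rng = random.Random(1)  # fixed seed so that the run is repeatable

# Hypothetical population: invented heights in cm, not real data.
population = [rng.gauss(170, 10) for _ in range(5000)]

# The sample: the 20 people we actually measure.
sample = rng.sample(population, 20)

sample_mean = statistics.mean(sample)
sample_sd = statistics.stdev(sample)              # sample standard deviation (n - 1)
standard_error = sample_sd / math.sqrt(len(sample))

# Two-sided 95% critical value of Student's t with 19 degrees of freedom.
T_CRIT_95_DF19 = 2.093

low = sample_mean - T_CRIT_95_DF19 * standard_error
high = sample_mean + T_CRIT_95_DF19 * standard_error

print(f"sample mean:          {sample_mean:.1f} cm")
print(f"95% interval for the population mean: {low:.1f} to {high:.1f} cm")
print(f"true population mean: {statistics.mean(population):.1f} cm")
```

In a simulation we can peek at the true population mean, which is exactly what we cannot do in a real study. The second question, about lab error rates and everyday behaviour, is harder: no formula can tell you whether an artificial lab task is representative of real use, which is where the common sense comes in.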

As is evident, answering these questions requires a combination of probability and common sense – and this is the job of statistics.