When you take a measurement, whether it is the time for someone to complete a task using some software, or a preferred way of doing something, you are using that measurement to find out something about the ‘real’ world – the average time for completion, or the overall level of preference amongst your users.

Two of the core things you need to know about are bias (is it a fair estimate of the real value?) and variability (how likely is it to be close to the real value?).

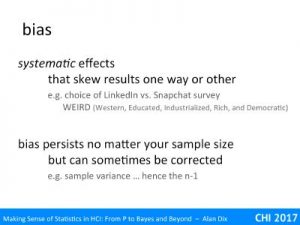

The word ‘bias’ in statistics has a precise meaning, but it is very close to its day-to-day meaning.

Bias is about systematic effects that skew your results in one way or another. In particular, if you use your measurements to predict some real-world effect, is that prediction likely to over- or underestimate the true value? In other words, is it a fair estimate?

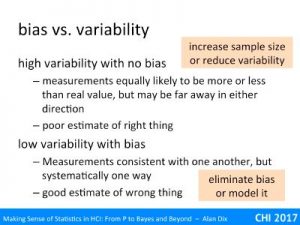

Say you take 20 users, and measure their average time to complete some task. You then use that as an estimate of the ‘true’ value, the average time to completion of all your users. Your particular estimate may be low or high (as we saw with the coin tossing experiments). However, if you repeated that experiment very many times, would the average of your estimates end up being the true average?

If the complete user base were employees of a large company, and the company forced them to work with you, you could randomly select your 20 users, and in that case, yes, the estimate based on the users would be unbiased[1].

However, imagine you are interested in the popularity of Justin Bieber and issued a survey on a social network as a way to determine this. The results would be very different if you chose to use LinkedIn or WhatsApp. No matter how randomly you selected users from LinkedIn, they are probably not representative of the population as a whole and so you would end up with a biased estimate of his popularity.

The typical way to improve an estimate in statistics is to take a bigger sample: more users, more tasks, more tests on each user. Crucially, this does not help with bias, which typically persists no matter the sample size[2].
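To make this concrete, here is a minimal simulation sketch. Everything in it is invented for illustration: the population, the 30% who belong to a hypothetical network, and the two "like" rates are assumptions, not data. It compares random samples drawn from the whole population with equally random samples drawn only from the network members.

```python
import random

random.seed(1)

# Hypothetical population: 30% are members of a network whose users
# like the artist far less often than everyone else (made-up rates).
population = []
for _ in range(200_000):
    in_network = random.random() < 0.3
    likes = random.random() < (0.05 if in_network else 0.40)
    population.append((in_network, likes))

true_rate = sum(likes for _, likes in population) / len(population)
network_only = [person for person in population if person[0]]

def estimate(frame, n):
    """Proportion who like the artist in a random sample of n people from frame."""
    sample = random.sample(frame, n)
    return sum(likes for _, likes in sample) / n

print(f"true rate: {true_rate:.3f}")
for n in (100, 1_000, 10_000):
    fair = estimate(population, n)
    biased = estimate(network_only, n)
    print(f"n={n:>6}  whole population: {fair:.3f}  network only: {biased:.3f}")
```

Under these assumptions the whole-population estimate settles towards the true rate as n grows, while the network-only estimate settles just as firmly on the wrong value.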

However, the good news is that sometimes it is possible to model bias and correct for it. For example, you might ask questions about age or other demographics and then use known population demographics to add weight to groups under-represented in your sample … although I doubt this would work for the Justin Bieber example: if there are 15-year-old members of LinkedIn, they are unlikely to be typical 15-year-olds!
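The weighting idea can be sketched in a few lines. The age groups, sample counts, per-group rates and population shares below are all invented for illustration, and the function name `weighted_estimate` is just a label for this sketch.

```python
def weighted_estimate(sample_rates, population_shares):
    """Combine per-group rates using known population shares instead of sample shares."""
    return sum(population_shares[g] * sample_rates[g] for g in population_shares)

# All numbers are made up: a sample that badly under-represents the young.
sample_counts = {"under_25": 50, "25_to_44": 600, "45_plus": 350}
sample_rates = {"under_25": 0.40, "25_to_44": 0.10, "45_plus": 0.02}
population_shares = {"under_25": 0.30, "25_to_44": 0.35, "45_plus": 0.35}

total = sum(sample_counts.values())
raw = sum(sample_counts[g] * sample_rates[g] for g in sample_counts) / total
weighted = weighted_estimate(sample_rates, population_shares)

print(f"raw sample estimate: {raw:.3f}")
print(f"reweighted estimate: {weighted:.3f}")
```

The correction is only as good as the assumption that the people sampled within each group are typical of that group, which is exactly the doubt raised about 15-year-olds on LinkedIn.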

If you have done an introductory statistics course you might have wondered about the ‘n-1’ that occurs in calculations of standard deviation or variance. In fact this is precisely a correction of bias: the raw standard deviation of a sample slightly underestimates the real standard deviation of the overall population. This is pretty obvious in the case n=1 – imagine grabbing someone from the street and measuring their height. Using that height as an average height for everyone would be pretty unreliable, but it is unbiased. However, the standard deviation of that sample of 1 is zero: it is one number, there is no spread. The underestimation is less obvious for samples of 2 or more, but it persists, shrinking as the sample grows. Happily, in this case you can model the underestimation precisely: using n-1 rather than n in the formula for estimated variance exactly corrects for it, and the corresponding standard deviation formula removes most of the underestimation.
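A small simulation makes the underestimation visible. This is a sketch under stated assumptions: samples of size 5 are drawn from a normal distribution with variance 1 (both choices are arbitrary), and Python's standard `statistics.pvariance` (divides by n) is compared with `statistics.variance` (divides by n-1).

```python
import random
import statistics

random.seed(0)
n = 5                 # arbitrary small sample size
true_variance = 1.0   # samples come from a normal distribution with sd 1

divide_by_n = []
divide_by_n_minus_1 = []
for _ in range(20_000):
    sample = [random.gauss(0, 1) for _ in range(n)]
    divide_by_n.append(statistics.pvariance(sample))         # divides by n
    divide_by_n_minus_1.append(statistics.variance(sample))  # divides by n-1

mean_n = statistics.fmean(divide_by_n)
mean_n_minus_1 = statistics.fmean(divide_by_n_minus_1)
print(f"average estimate dividing by n:   {mean_n:.3f}")
print(f"average estimate dividing by n-1: {mean_n_minus_1:.3f}")
```

With n=5 the divide-by-n average should come out near (n-1)/n = 0.8 of the true variance, while the n-1 version averages close to 1.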

If you toss 10 coins, there is only a one in five hundred chance of getting either all heads or all tails, and about a one in fifty chance of getting only one head or one tail; the really extreme values are relatively unlikely. However, there is about a one in ten chance of getting either just two heads or two tails. Even so, if you kept tossing the coins again and again, the times you got 2 heads and 8 tails would approximately balance the opposite, and overall you would find that the average proportion of heads and tails would come out 50:50.

That is, the proportion you estimate by tossing just 10 coins has a high variability, but is unbiased. It is a poor estimate of the right thing.
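The chances quoted for 10 coins can be checked exactly by counting outcomes. The helper name `prob_split` is just a label for this sketch; it assumes fair, independent coins.

```python
from math import comb

def prob_split(k, n=10):
    """Chance that n fair coins give exactly k heads or exactly k tails."""
    if 2 * k == n:
        # k heads and k tails are the same outcome, so do not count it twice.
        return comb(n, k) / 2**n
    return 2 * comb(n, k) / 2**n

for k in (0, 1, 2):
    p = prob_split(k)
    print(f"{k} heads or {k} tails: {p:.4f} (about 1 in {1 / p:.0f})")
```

This gives 1 in 512 for all heads or all tails, about 1 in 51 for a single head or tail, and about 1 in 11 for two, in line with the rounded figures above.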

Often the answer is to just take a larger sample – toss 100 coins or 1000 coins, not just 10. Indeed, when looking for infrequent events, physicists may leave equipment running for months on end, taking thousands of samples per second.

You can sample yourself out of high variability!
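How quickly does variability fall? A rough sketch, using the standard formula for the standard deviation of a sample proportion, sqrt(p(1-p)/n), with p=0.5 for a fair coin (the function name is only illustrative):

```python
from math import sqrt

def proportion_sd(n, p=0.5):
    """Standard deviation of the proportion of heads seen in n independent tosses."""
    return sqrt(p * (1 - p) / n)

for n in (10, 100, 1000):
    print(f"{n:>5} coins: proportion of heads typically varies by about {proportion_sd(n):.3f}")
```

Going from 10 coins to 1000 narrows the spread by a factor of ten, not a hundred, which is why a bigger sample is not always an affordable fix.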

Think now about studies with real users – if tossing ten coins can lead to such high variability, what about those measurements on ten users?

In fact there may be time, cost and practicality limits on how many users you can involve, so there are times when you can’t just have more users. My ‘gaining power’ series of videos includes strategies, including reducing variability, for obtaining more traction from the users and time you have available.

In contrast, let’s imagine you have performed a random survey of 10,000 LinkedIn users and obtained data on their attitudes to Justin Bieber. Let’s say you found 5% liked Justin Bieber’s music. Remembering the quick and dirty rule[3], the variability on this figure is about +/- 0.5%. If you repeated the survey, you would be likely to get a similar answer.
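The arithmetic behind that figure can be written out as a short sketch. It assumes the rule of thumb used here, that the variability of a count is roughly twice its square root; the function name `quick_and_dirty` is made up for this example.

```python
from math import sqrt

def quick_and_dirty(count, total):
    """Return (share, margin) using the rule: count +/- twice the square root of count."""
    margin = 2 * sqrt(count)
    return count / total, margin / total

# 5% of 10,000 respondents is 500 people.
share, margin = quick_and_dirty(500, 10_000)
print(f"{share:.1%} +/- {margin:.2%}")
```

For 500 out of 10,000 this gives a margin of a little under 0.5%, matching the rounded figure in the text.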

That is, you have a very reliable estimate of his popularity amongst all LinkedIn users, but if you are interested in overall popularity, is this any use?

You have a good estimate of the wrong thing.

As we’ve discussed, you cannot simply sample your way out of this situation: if your process is biased it is likely to stay so. In this case you have two main options. You may try to eliminate the bias – maybe sample over a wide range of social networks that between them offer a more representative view of society as a whole. Alternatively, you might try to model the bias, and correct for it.

On the whole, high variability is a problem, but there are relatively straightforward strategies for dealing with it. Bias is your real enemy!

1. Assuming they behaved as normal in the test and weren’t annoyed at being told to be ‘volunteers’.

2. Actually there are some forms of bias that do go away with large samples (estimators with this property are called asymptotically unbiased), but this does not apply in cases where the way you choose your sample has created an unrepresentative sample, or the way you have set up your study favours one outcome.

3. 5% of 10,000 represents 500 users. The square root of 500 is around 22, and twice that is a bit under 50, so our estimate of variability is 500 +/- 50, or, as a percentage of users, 5% +/- 0.5%.