I am using HTML5’s offline mode as part of the Tiree Mobile Archive project.

This is, in principle, a lovely way of creating web sites that behave pretty much like native apps on mobile devices. However, things, as you can guess, do not always go as smoothly as the press releases and blogs suggest!

Some time I must write at length on various useful lessons, but, for now, just one – the potential for an endless cycle of caches, rather like Jörmungandr, the Norse world serpent, that wraps around the world swallowing its own tail.

My problem started with a file (which I will call ‘shared.prob’ below, but which was actually ‘place_data.js’) that I had updated on the web server. Chrome kept showing an old version no matter how many times I hit refresh, even after I went to the history settings and asked Chrome to empty its cache.

I eventually got to the bottom of this and it turned out to be this Jörmungandr, cache-eats-cache, problem (browser bug!), but I should start at the beginning …

To make a web site work off-line in HTML5 you simply point to an application cache manifest file from the manifest attribute of the main file’s <html> tag. The browser then pre-loads all of the files mentioned in the manifest to create the application cache (appCache for short). The site is then viewable off-line. If this is combined with off-line storage using the built-in SQLite database, you can have highly functional applications, which can sync to central services using AJAX when connected.

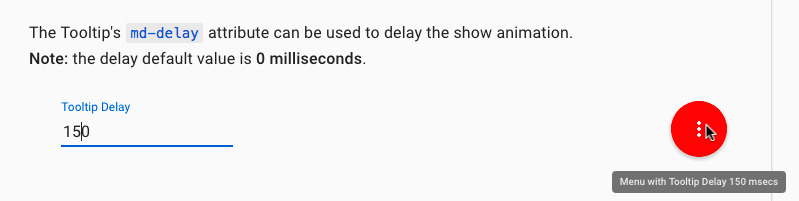
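To make the manifest a little more concrete, here is a minimal sketch of what one contains, written as a small JavaScript helper that builds the text. The helper name `buildManifest`, the file names and the version label are all made up for illustration; only the manifest layout itself (the `CACHE MANIFEST` first line, `#` comments, and the `CACHE:` and `NETWORK:` sections) comes from the appCache format.

```javascript
// Hypothetical helper: builds the text of an application cache manifest.
// File names and the version label are illustrative, not from the real project.
function buildManifest(version, files) {
  const lines = [
    'CACHE MANIFEST',            // must be the first line of the file
    '# version ' + version,      // a comment; editing it changes the manifest
    '',
    'CACHE:',
    ...files,                    // files to pre-load into the appCache
    '',
    'NETWORK:',
    '*',                         // anything else is fetched from the network
  ];
  return lines.join('\n') + '\n';
}

const manifest = buildManifest('example-1', [
  'index.html',
  'app.js',
  'shared/icon.png',
]);
console.log(manifest);
```

The main page would then refer to the saved file with something like `<html manifest="site.appcache">` (again, the file name is just an example), and the server needs to send it with the `text/cache-manifest` MIME type.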

Of course, sometimes you update files in the site and would like browsers to pick up the new versions. To do this you update the files, and then also change the manifest file in some way (often by updating a version number or date in a comment). The browser checks the manifest file when it is next connected (at least some browsers do this themselves; for others you need to add JavaScript code to do it), and when it notices the manifest has changed it invalidates the appCache and rechecks all the files mentioned in the manifest, downloading the new versions.
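For browsers that need the extra nudge, the check might look something like the sketch below. It takes any object that behaves like `window.applicationCache`; the function name `watchForUpdates` and the `onNewVersion` callback are my own illustrative names, and what you do when a new version arrives (reload, prompt the user, ...) is up to you.

```javascript
// Sketch: ask the browser to re-check the manifest and swap in a new cache.
// `appCache` is expected to behave like window.applicationCache;
// `onNewVersion` is a hypothetical callback (e.g. one that reloads the page).
function watchForUpdates(appCache, onNewVersion) {
  appCache.addEventListener('updateready', function () {
    if (appCache.status === appCache.UPDATEREADY) {
      appCache.swapCache();   // switch to the freshly downloaded cache
      onNewVersion();
    }
  });
  try {
    appCache.update();        // asks the browser to re-check the manifest
  } catch (e) {
    // update() throws if the page has no application cache yet (first load)
  }
}

// In a browser:
// watchForUpdates(window.applicationCache, function () {
//   window.location.reload();
// });
```

Passing the cache object in as a parameter, rather than reaching for the global, is only a convenience so the logic can be tried out with a stand-in object.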

Great, your web site becomes an off-line app and gets automatically updated 🙂

Of course as you work on your site you are likely to end up with different versions of it. Each version has its own main html file and manifest giving a different appCache for each. This is fine, you can update the versions separately, and then invalidate just the one you updated – particularly useful if you want a frozen release version and a development version.

Of course there may be some files, for example icons and images, that are relatively static between versions, so you end up having both manifest files mentioning the same file. This is fine so long as the file never changes, but, if you ever do update that shared file, things get very odd indeed!

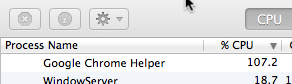

I will describe Chrome’s behaviour as it seems particularly ‘aggressive’ at caching, maybe because Google are trying to make their own web apps more efficient.

First you update the shared file (let’s call it shared.prob), then invalidate the two manifest files by updating them.

Next time you visit the site for appCache_1 Chrome notices that manifest_1 has been invalidated, so decides to check whether the files in the manifest need updating. When it gets to shared.prob it is about to go to the web to check it, then notices it is in appCache_2 – so uses that (old version).

Now it has the old version in appCache_1, but thinks it is up-to-date.

Next you visit the site associated with appCache_2. It notices manifest_2 is invalidated, checks files … and, you guessed it, when it gets to shared.prob, it takes the same old version from appCache_1 🙁 🙁

They seem to keep playing catch like that for ever!

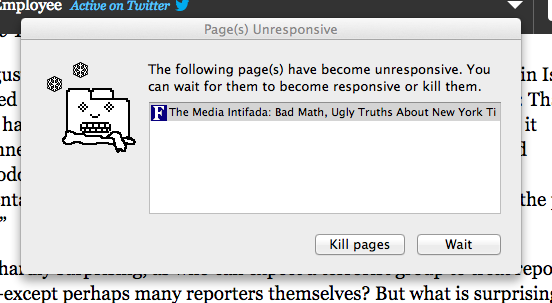
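The cycle is easier to believe once you see it as a toy model. The sketch below is only my reading of the behaviour described above, not Chrome's actual implementation: `server`, `manifests`, `caches` and `refresh` are invented names, and the one rule it encodes is "when rebuilding an appCache, prefer a copy found in another appCache over going to the web".

```javascript
// Toy model of the behaviour described above; NOT Chrome's real code.
// All names here are made up for illustration.
const server = { 'shared.prob': 'v1' };
const manifests = {
  appCache_1: ['shared.prob'],
  appCache_2: ['shared.prob'],
};
const caches = {
  appCache_1: { 'shared.prob': 'v1' },
  appCache_2: { 'shared.prob': 'v1' },
};

// Rebuild one appCache after its manifest has changed. For each file, a copy
// held in any *other* appCache is used in preference to the server's copy.
function refresh(name) {
  const rebuilt = {};
  for (const file of manifests[name]) {
    const other = Object.keys(caches).find(
      (n) => n !== name && file in caches[n]
    );
    rebuilt[file] = other ? caches[other][file] : server[file];
  }
  caches[name] = rebuilt;
}

server['shared.prob'] = 'v2';   // update the shared file on the server

const seen = [];
for (let visit = 0; visit < 3; visit++) {
  refresh('appCache_1');        // visit site 1 after invalidating manifest_1
  refresh('appCache_2');        // visit site 2 after invalidating manifest_2
  seen.push(caches.appCache_1['shared.prob'], caches.appCache_2['shared.prob']);
}
console.log(seen.join(' '));    // v1 v1 v1 v1 v1 v1
```

However many times the two caches are refreshed, each copies the stale `v1` from the other and the server's `v2` is never fetched.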

The only way out is to navigate to the pseudo-url ‘chrome://appcache-internals/’, which lets you remove caches entirely … wonderful.

I don’t know if there is an equivalent to this on the Android browser. It certainly seems to have odd caching behaviour, but it does seem to ‘sort itself out’ after a time! Other browsers seem to have problems like this temporarily, but a few forced refreshes seem to work!

For future versions I plan to use some Apache ‘Rewrite’ rules to make it look to the browser as if the shared files are in fact two completely different files:

RewriteRule ^version_3/shared/(.*)$ /shared_place/$1 [L]
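To check which request paths that rule would catch, the same pattern can be tried out as a JavaScript regular expression. This is just a way of reasoning about the mapping (Apache applies the real rule); the `rewrite` function and the example file name are illustrative, and the paths are relative, as they would be for a per-directory rule.

```javascript
// Mirrors the pattern from the RewriteRule above as a JavaScript regex.
// Only a thinking aid: the function and file names are illustrative.
const pattern = /^version_3\/shared\/(.*)$/;

function rewrite(path) {
  // Matching paths are served from /shared_place/; others are left alone.
  return pattern.test(path)
    ? path.replace(pattern, '/shared_place/$1')
    : path;
}

console.log(rewrite('version_3/shared/icon.png')); // /shared_place/icon.png
console.log(rewrite('version_2/shared/icon.png')); // version_2/shared/icon.png
```

The browser only ever sees the `version_3/shared/...` URL, so each version would need its own rule of this kind for its shared files to look distinct while still living in one place on the server.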

To be fair, the cache cycle is more of a problem during development than deployment, but still … so confusing.

Useful sites:

These are some sites I found useful for the application cache, but none sorted everything … and none mentioned Chrome’s infinite cache cycle!

- http://www.w3.org/TR/2008/WD-html5-20080122/#appcache

The W3C specification – of course this tells you how appCache is supposed to work, not necessarily what it does on actual browsers!

- http://www.html5rocks.com/en/tutorials/appcache/beginner/

It is called “A Beginner’s Guide to using the Application Cache”, but is actually pretty complete.

- http://appcachefacts.info

Really useful quick reference, but: “FACT: Any changes made to the manifest file will cause the browser to update the application cache.” – don’t you believe it! For some browsers (Chrome, Android) you have to add your own checks in the code (see the “Updating the cache” section in “A Beginner’s Guide …”).

- http://manifest-validator.com/

Wonderful on-line manifest file validator checks both syntax and also whether all the referenced files download OK. Of course it cannot tell whether you have included all the files you need to.
