Although they are both founded in probability theory, traditional statistics and Bayesian statistics have fundamental philosophical differences in the way they treat uncertainty. Bayesian methods demand that the uncertainty is quantified, whereas traditional methods accept this uncertainty and reason from that. However, in practice our knowledge is somewhere between complete ignorance and precise probability, and both methods have ways of dealing with this in-between knowledge.

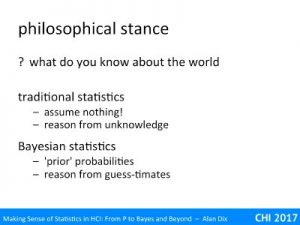

We have seen that both traditional statistics and Bayesian statistics effectively start with the same underlying data, and in many circumstances yield effectively equivalent results. However, they adopt fundamentally different philosophical stances in the way that they use that data to answer questions about the world. These philosophical differences are critical in interpreting their results.

Traditional statistics effectively assumes nothing about the world: are there Martians or not, is your new design better than the old one or not? It is not so much neutral as taking no sides at all. It then seeks to reason from that state of unknowledge.

Bayesian statistics instead asks you to quantify that unknowledge into prior probabilities, and then reasons in an apparently mathematically clean way, but based on those guesstimates.

In some ways traditional statistics is post-modern, accepting uncertainty and leaving it even in the eventual interpretation of the results, whereas Bayesian statistics suggests a more closed world. However, with Bayesian statistics the uncertainty is still there, just encapsulated in the guesstimate of the prior.

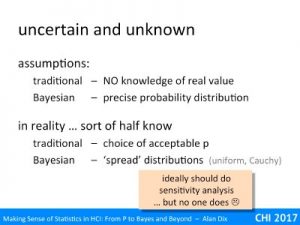

On the surface they have radically different assumptions about the unknown features of the real world. Traditional statistics assumes no knowledge of the real value, whereas Bayesian statistics assumes a precise probability distribution.

However, neither the world, nor the statistics we use to make sense of it, is as clear-cut.

Typically we have some knowledge about the likelihood (in the day-to-day sense) of things: you are pretty unlikely to encounter Martians; the coin you’ve pulled from your pocket is likely to be fair; that new design for the software, which you’ve put a lot of effort into creating, should be better than the old system. However, typically we do not have a precise measure of that knowledge.

In their purest form, traditional statistics entirely ignores that knowledge and Bayesian statistics asks you to make it precise in a way that goes beyond your actual knowledge, turning uncertainty into precise probability. The former ignores information, the latter forces you to invent it!

In practice, both techniques are a little more nuanced.

In traditional statistics the significance level you are willing to accept as good evidence (p<5%, p<1%) often reflects your prior beliefs: you will probably demand a far more stringent level before you really call the Men in Black, or even before you accept that the coin may be loaded. Effectively, a degree of Bayesian reasoning is applied during interpretation.
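To make the point about thresholds concrete, here is a minimal sketch in Python. The data (9 heads in 10 tosses) and the function name `two_sided_p_value` are my own assumptions for illustration, not taken from the earlier example. It computes an exact two-sided p-value for a fair coin and compares it with the two conventional levels.

```python
from math import comb

def two_sided_p_value(heads, tosses):
    """Exact two-sided p-value for the null hypothesis of a fair coin.

    Because the fair-coin distribution is symmetric, the two-sided value
    is taken as twice the probability of the more extreme tail, capped at 1.
    """
    extreme = max(heads, tosses - heads)
    tail = sum(comb(tosses, k) for k in range(extreme, tosses + 1)) / 2 ** tosses
    return min(1.0, 2 * tail)

# Hypothetical data, chosen only for illustration: 9 heads in 10 tosses.
p = two_sided_p_value(9, 10)

# The same evidence passes one threshold and fails the other.
verdicts = {level: p < level for level in (0.05, 0.01)}
print(f"p = {p:.4f}")
for level, significant in verdicts.items():
    print(f"significant at {level:.0%}? {significant}")
```

The calculation is identical in both cases; which threshold you insist on is where your prior sense of how plausible a loaded coin is comes in.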

Similarly, while Bayesian statistics demands a precise prior probability distribution, in practice uniform or other very ‘spread’ priors are often used, reflecting the high degree of uncertainty. Ideally one would try a number of priors to obtain a level of sensitivity analysis, rather as we did in the example, but I have not seen this done in practice, possibly because it would add another level of interpretation to explain!
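A sensitivity analysis of this kind could look something like the sketch below. It assumes a coin-tossing setting with Beta priors, which update in closed form, and hypothetical data of 7 heads in 10 tosses. The particular priors, their labels and the function names are my own choices for illustration.

```python
from fractions import Fraction

def posterior_mean(prior_a, prior_b, heads, tosses):
    """Posterior mean of P(heads) under a Beta(prior_a, prior_b) prior.

    A Beta prior combined with coin-toss data gives a
    Beta(prior_a + heads, prior_b + tails) posterior.
    """
    post_a = prior_a + heads
    post_b = prior_b + (tosses - heads)
    return Fraction(post_a, post_a + post_b)

def sensitivity(priors, heads, tosses):
    """Posterior mean for each named prior, given the same data."""
    return {name: posterior_mean(a, b, heads, tosses)
            for name, (a, b) in priors.items()}

# Hypothetical data, chosen only for illustration: 7 heads in 10 tosses.
HEADS, TOSSES = 7, 10

# Assumed priors, from very spread to strongly committed to a fair coin.
PRIORS = {
    "uniform Beta(1, 1)": (1, 1),
    "mildly fair Beta(5, 5)": (5, 5),
    "strongly fair Beta(50, 50)": (50, 50),
}

results = sensitivity(PRIORS, HEADS, TOSSES)
for name, mean in results.items():
    print(f"{name}: posterior mean = {float(mean):.3f}")
```

If the posterior means stay close together across priors, the conclusion is driven by the data; if they spread widely, as they will with only ten tosses, the result depends heavily on the guesstimate of the prior.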