We often deal with survey or count data. This might come in public forms such as opinion poll data preceding an election, or from your own data, when you email out a survey or count kinds of errors in a user study.

So when you find that 27% of the users in your study had a problem, how confident do you feel in using this to estimate the level of prevalence amongst users in general? If you did a bigger study with more users, would you be surprised if the figure you got was actually 17%, 37% or 77%?

You can work out precise numbers for this, but in this video I’ll give a simple rule-of-thumb method for doing a quick estimate.

We’re going to deal with this by looking at three separate cases.

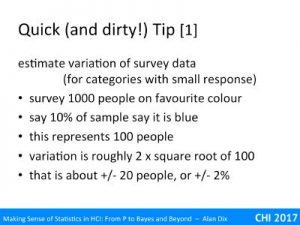

First when the number you are dealing with is a comparatively small proportion of the overall sample.

For example, assume you want to know about people’s favourite colours. You do a survey of 1000 people and 10% say their favourite colour is blue. The question is how reliable this figure is. If you had done a larger survey, would the answer be close to 10% or could it be very different?

The simple rule is that the variation is 2 x the square root of the number of people who chose blue.

To work this out, first calculate how many people the 10% represents. Given the sample was 1000, this is 100 people. The square root of 100 is 10, so 2 times this is 20 people. You can be reasonably confident (about 95%) that the number of people choosing blue in your sample is within +/- 20 of the number you’d expect from the population as a whole. Dividing that +/- 20 people by the 1000 sample, the percentage of people for whom blue is their favourite colour is likely to be within +/- 2% of the measured 10%.
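The arithmetic above can be sketched in a few lines of Python. This is only an illustration of the rule of thumb; the function name `small_proportion_margin` is my own invention for this sketch, not part of any library.

```python
import math

def small_proportion_margin(sample_size, percent):
    """Rule-of-thumb margin for a small proportion: 2 x sqrt(count).

    Hypothetical helper for illustration only.
    Returns (margin in people, margin in percentage points).
    """
    count = sample_size * percent / 100       # people who gave this answer
    margin_people = 2 * math.sqrt(count)      # roughly a 95% range, in people
    margin_percent = 100 * margin_people / sample_size
    return margin_people, margin_percent

# The favourite-colour example: 1000 people, 10% chose blue.
people, percent = small_proportion_margin(1000, 10)
print(f"+/- {people:.0f} people, +/- {percent:.1f}%")  # +/- 20 people, +/- 2.0%
```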

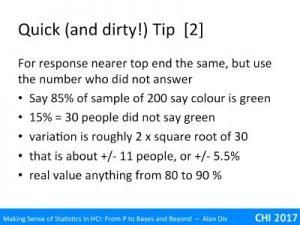

The second case is when you have a large majority who have selected a particular option.

For example, let’s say in another survey, this time of 200 people, 85% said green was their favourite colour.

This time you still apply the “2 x square root” rule, but instead focus on the smaller number, those who didn’t choose green. The 15% who didn’t choose green is 15% of 200, that is, 30 people. The square root of 30 is about 5.5, so the expected variability is about +/- 11 people, or in percentage terms about +/- 5.5%.

That is, the real proportion over the population as a whole could be anywhere between about 80% and 90%.

Notice how the variability of the proportion estimate from the sample increases as the sample size gets smaller.
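Here is a small Python sketch of the majority case. The function name `majority_margin` is made up for this example, and the 2000-person survey in the second call is a hypothetical comparison I have added to show the effect of sample size; only the 200-person figures come from the example above.

```python
import math

def majority_margin(sample_size, percent):
    """Rule of thumb for a large majority: 2 x sqrt of the minority count.

    Hypothetical helper for illustration only.
    Returns (margin in people, margin in percentage points).
    """
    minority = sample_size * (100 - percent) / 100   # those who chose otherwise
    margin_people = 2 * math.sqrt(minority)
    return margin_people, 100 * margin_people / sample_size

# The green example (200 people), then an assumed larger survey for comparison.
for n in (200, 2000):
    people, pct = majority_margin(n, 85)
    print(f"n={n}: +/- {people:.1f} people, "
          f"range {85 - pct:.1f}% to {85 + pct:.1f}%")
```

For the 200-person survey this gives roughly +/- 5.5%, while the hypothetical 2000-person survey gives under +/- 2%.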

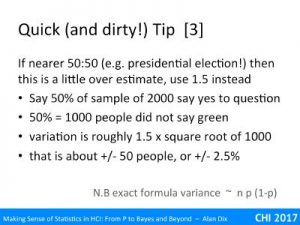

Finally, if the numbers are near the middle, just take the square root of the count as before, but this time multiply by 1.5 rather than 2.

For example, if you took a survey of 2000 people and 50% answered yes to a question, this represents 1000 people. The square root of 1000 is a bit over 30, so 1.5 times this is around 50 people, so you expect a variation of about +/- 50 people, or about +/- 2.5%.
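The same calculation without the rounding, as a minimal Python sketch (the function name `middle_margin` is an assumption of mine, used only for this illustration):

```python
import math

def middle_margin(sample_size, percent):
    """Rule of thumb for proportions near 50%: 1.5 x sqrt(count).

    Hypothetical helper for illustration only.
    Returns (margin in people, margin in percentage points).
    """
    count = sample_size * percent / 100
    margin_people = 1.5 * math.sqrt(count)
    return margin_people, 100 * margin_people / sample_size

# The yes/no example: 2000 people, 50% answered yes.
people, pct = middle_margin(2000, 50)
print(f"+/- {people:.1f} people, +/- {pct:.2f}%")
```

Unrounded, this comes out at about 47 people, or a little under +/- 2.4%, which is close enough to the "around 50" and "2.5%" of the quick mental estimate.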

Opinion polls for elections often have samples of around 2000, so if the parties are within a few points of each other you really have no idea who will win.

For those who’d like to understand the detailed stats for this (skip if you don’t!) …

These three cases are simplified forms of the precise mathematical formula for the variance of a Binomial distribution, np(1-p), where n is the number in the sample and p is the true population proportion for the thing you are measuring. When you are dealing with fairly small proportions the 1-p term is close to 1, so the whole variance is close to np, that is, the number with the given value. For a large majority the roles swap: p is close to 1, so the variance is close to n(1-p), the number who did not give that answer. You then take the square root to give the standard deviation. The factor of 2 is because about 95% of measurements fall within 2 standard deviations. The reason this becomes 1.5 in the middle is that you can no longer treat (1-p) as nearly 1, and for p = 0.5 this makes the standard deviation smaller by a factor of the square root of 0.5, which is about 0.7. Two times 0.7 is (about) one and a half (I did say quick and dirty!).
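If you want to see how close the quick estimates are to the precise formula, the following Python sketch compares the two for the three worked examples. Both function names are invented for this illustration. The cut-off for "near the middle" (30% to 70% here) and the choice to use the smaller of the two counts in that band are my own assumptions, since the rule of thumb does not pin either down.

```python
import math

def exact_margin(n, p):
    """Two standard deviations of a Binomial(n, p) count: 2 * sqrt(n p (1-p))."""
    return 2 * math.sqrt(n * p * (1 - p))

def rule_of_thumb_margin(n, p, middle_band=(0.3, 0.7)):
    """Quick estimate from the three cases.

    middle_band is an assumed cut-off for 'near the middle',
    not something the rule of thumb itself specifies.
    """
    count = n * min(p, 1 - p)          # always work from the smaller group
    low, high = middle_band
    factor = 1.5 if low <= p <= high else 2.0
    return factor * math.sqrt(count)

# (sample size, proportion) for the blue, green and yes/no examples.
for n, p in [(1000, 0.10), (200, 0.85), (2000, 0.50)]:
    print(f"n={n}, p={p:.2f}: "
          f"quick +/- {rule_of_thumb_margin(n, p):.1f}, "
          f"exact +/- {exact_margin(n, p):.1f} people")
```

In each of the three worked examples the quick estimate comes out a little wider than the exact figure, which is the safer direction to be wrong in.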

However, for survey data, or indeed any kind of data, these calculations of variability are in the end far less critical than ensuring that the sample really does adequately measure the thing you are after.

Is it fair? – Has the way you have selected people made one outcome more likely? For example, if you do an election opinion poll of your Facebook friends, this may not be indicative of the country at large!

For surveys, has there been self-selection? – Maybe you asked a representative sample, but who actually answered? Often you get more responses from those who have strong feelings about the issue. For usability of software, this probably means those who have had a problem with it!

Have you phrased the question fairly? – For example, people are far more likely to answer “yes” to a question, so if you ask “do you want to leave?” you might get 60% saying “yes” and 40% saying “no”, but if you asked the question the opposite way, “do you want to stay?”, you might still get 60% saying “yes”.
