In response to a Facebook thread about my recent LSE Impact Blog post, “Evaluating research assessment: Metrics-based analysis exposes implicit bias in REF2014 results”, Joe Marshall commented,

“Citation databases are a pain, because you can’t standardise across fields. For computer science, Google scholar is the most comprehensive, although you could argue that it overestimates because it uses theses etc as sources. Scopus, web of knowledge etc. all miss out some key publications which is annoying”

My answer was getting a little too complicated for a Facebook reply; hence a short blog post.

For any individual paper you get a lot of variation between Scopus and Google Scholar, but from my experience with the data I would say they are not badly correlated if you look at big enough units. There are a few exceptions, notably bio-tech papers, which tend to be placed more highly under Scopus than under Google Scholar.

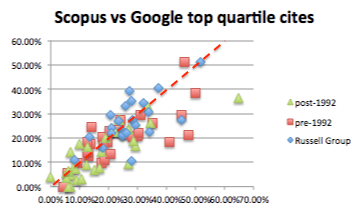

Crucial for REF is how this works at the level of whole-institution data. I took a quick peek at the REF institution data, comparing top quartile counts for Scopus and Google Scholar; that is, the proportion of papers submitted from each institution that were in the top 25% of papers when ranked by citation counts. Top quartile is chosen as it should be a reasonable predictor of 4* (about 22% of papers).
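To make the top quartile measure concrete, here is a minimal sketch of how such a proportion could be computed. It is not the script behind the graphs: the function name `top_quartile_share`, the input layout, and the toy numbers are all made up for illustration, and it assumes a single ranking over all papers with ties at the cut-off counted as top quartile.

```python
from collections import defaultdict

def top_quartile_share(papers):
    """papers: list of (institution, citation_count) pairs (hypothetical layout).

    Returns {institution: fraction of its papers in the top 25% overall}.
    Assumption: one ranking across all papers; ties at the cut-off count as in.
    """
    if not papers:
        return {}
    counts = sorted((c for _, c in papers), reverse=True)
    k = max(1, len(counts) // 4)   # how many papers make up the top quarter
    threshold = counts[k - 1]      # citation count of the last paper in that quarter
    totals = defaultdict(int)
    top = defaultdict(int)
    for inst, c in papers:
        totals[inst] += 1
        if c >= threshold:
            top[inst] += 1
    return {inst: top[inst] / totals[inst] for inst in totals}

# Toy data, not real REF figures.
papers = [("A", 50), ("A", 45), ("A", 3), ("A", 1),
          ("B", 40), ("B", 5), ("B", 2), ("B", 0)]
shares = top_quartile_share(papers)
```

Running this once with Scopus counts and once with Google Scholar counts would give the two numbers per institution that are compared on the axes of a scatter plot.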

The first of these graphs shows Scopus (x-axis) vs Google Scholar (y-axis) for whole institutions. The red line is at 45 degrees, representing an exact match. Note that many institutions are relatively small, so we would expect a level of spread.

While far from perfect, there is clustering around the line and, crucially, this holds for all types of institution. The major outlier (green triangle to the right) is Plymouth, which does have a large number of biomed papers. In short, while one citation metric might be better than the other, they do give roughly similar outcomes.
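If you wanted to put a number on "clustering around the line", one rough option is a correlation coefficient plus the average vertical distance from the 45 degree line. The sketch below is only an illustration under that assumption; the helper names and the four data points are invented, not taken from the REF data.

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(vx * vy)

def mean_abs_gap(xs, ys):
    """Average vertical distance of the points (x, y) from the line y = x."""
    return sum(abs(y - x) for x, y in zip(xs, ys)) / len(xs)

# Invented top quartile shares for four institutions.
scopus = [0.10, 0.20, 0.30, 0.40]
scholar = [0.12, 0.18, 0.33, 0.41]

r = pearson(scopus, scholar)
gap = mean_abs_gap(scopus, scholar)
```

A correlation near 1 together with a small gap would correspond to points hugging the red line; a high correlation with a large gap would mean the two metrics agree on ordering but not on level.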

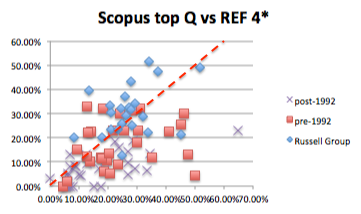

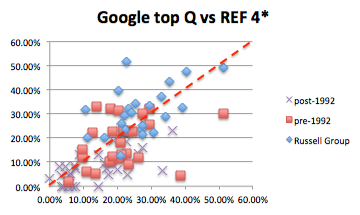

This is very different from what happens if you compare either with actual REF 4* results:

In both cases not only is there far less agreement, but there are also systematic effects. In particular, the post-1992 institutions largely sit below the red line; that is, they are scored far less highly by the REF panel than by either Scopus or Google Scholar. This is a slightly different metric, but precisely the result I previously found when looking at institutional bias in REF.
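A systematic effect of this kind shows up as a signed gap that does not average out within a group. As a hedged sketch (the function name, the group labels other than post-1992, and all the numbers are hypothetical), you could average REF 4* share minus citation-based share for each type of institution:

```python
from collections import defaultdict

def mean_signed_gap_by_group(rows):
    """rows: (group, metric_share, ref_share) triples (hypothetical layout).

    Returns {group: mean of (ref_share - metric_share)}.
    A negative value means the group sits below the 45 degree line on average.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for group, metric_share, ref_share in rows:
        sums[group] += ref_share - metric_share
        counts[group] += 1
    return {g: sums[g] / counts[g] for g in sums}

# Invented values purely for illustration, not REF results.
rows = [
    ("pre-1992", 0.30, 0.32),
    ("pre-1992", 0.25, 0.24),
    ("post-1992", 0.20, 0.10),
    ("post-1992", 0.15, 0.09),
]
gaps = mean_signed_gap_by_group(rows)
```

Random spread alone would leave every group's mean gap close to zero; a clearly negative mean for one group is what "largely sit below the red line" looks like numerically.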

Note that all of these graphs look far tighter if you measure GPA rather than 4* results, but of course it is largely 4* that is funded.
