Years ago I wrote a short CHI paper with Roberta Mancini and Stefano Levialdi, “Communication, action and history”, all about the differences between language and action; yet for the second time in a few weeks I am writing about the links between them. Of course, there are both similarities and differences.

In my recent post about “language and action: sequential associative parsing”, I compared the role of semantics in the parsing of language with the similar role semantics plays in linking disparate events in our interpretation of the world, most significantly the actions of others. The two differ, however, in that language is deliberative and intentionally communicative, and hence has a structure, a rule-iness resulting from conventions; it is chosen to make it easier for the recipient to interpret. In contrast, the events of the world have structure inherent in their physical nature, but do not structure themselves in order that we may interpret them; their rule-iness is inherent, not intentional. The actions of other people and animals, however, often fall between the two.

In this post I will focus on the individual actions of creatures in the world: how observing others tells us about their current activities and even their intended actions, and thus how these observations become a resource for planning our own actions. However, our own actions are also the subject of observation and hence available to others. We may deliberately hide or obfuscate our intentions and actions if we do not wish others to ‘read’ what we are doing; however, we may also exaggerate them, making them more obvious when we are collaborating. That is, we shape our actions in the light of their potential observation by others so that they become an explicit communication to them.

This exaggeration is evident both in computer environments and in the physical world, and may even be the root of iconic gesture and hence of language itself.

The CSCW Framework

In the early 1990s I began to use variants of the following diagram as a way to understand the different kinds of systems found in groupware and CSCW research. It has proved useful in many papers and notably in the groupware chapter of the HCI textbook.

communication through the artefact

(from “Computer Supported Cooperative Work – A Framework”).

At the top, the two circles labelled ‘P’ represent participants in a collaborative interaction. Although there are two, they are taken to represent any number in a group¹. The ‘A’ represents the ‘artefacts of work’, the things people are creating or working with in order to achieve their collaborative purpose. The simple diagram helps to distinguish direct communication from the control of and feedback from shared artefacts, and also to discuss the role of deixis (and even barcodes!) in collaborative work.

Most important for the current argument is that the actions of one participant on shared artefacts can often be observed by the other participants. That is, in addition to feedback from one’s own actions, one obtains feedthrough about the actions of others. This creates a path of communication through the artefact in addition to that of direct communication.

The framework was formulated to discuss computer-mediated interactions, so the core examples of shared artefacts were shared documents, diaries and files; nowadays it would be Google Docs, but at the time it was various collaborative authoring and editing tools such as DistEdit² and Quilt³.

However, the framework diagram was typically illustrated through the same kinds of interactions with physical-world objects, most often moving furniture.

“Compare two persons moving an object, say a piano, by hand with them moving it with the aid of two (small) forklift trucks. … In the manual case, much of the time you hear little (except grunts). As they manoeuvre it round corners, one of them (probably the one in front) may tip it and the other, feeling the movement, moves in concert. …”

extract from “Computer Supported Cooperative Work – A Framework”.

Our skills in understanding actions on virtual artefacts are inherited from our real world interactions.

So, let’s move from the computer systems of the 21st century and take ourselves to a place far away from all technology, or maybe back to a simpler time before computers.

Conflict and camouflage

Somewhere on an African plain a lioness watches a zebra. The zebra has more meat than the many bucks that roam freely and would make a better meal for her cubs, but it is fast and has sufficient stamina to outrun her in a long chase. If the lioness makes a dash from too far away, the zebra will escape and the meal is lost. So the lioness slowly moves through the grass, hoping to get close enough to go for the kill. Meanwhile the zebra has noticed the lioness, but the grass is good and the lioness may not be hungry or may have had a recent kill. It is better to stay and eat the good grass than to run unnecessarily. So the zebra watches the lioness, but tries not to show that it is doing so. The lioness is also watching for signs that the zebra has taken fright. She is trying to get as close as possible, but if the zebra starts to run she will chase it; the slightest movement that looks like flight is her trigger to move.

Each animal is watching the other, reading its movements and looking for subtle signs of the intention to act. But they are in conflict; their goals diverge in the extreme, so they obfuscate and hide both action and intention. Some of this is played out in the moment: the lioness holding her body low amongst the grass. Some has been set by millennia of adaptation: the golden coat of the lioness is almost invisible amongst the sun-scorched grass and red-oxide earth; the stripes of the zebra confuse its form against the bush.

Collaboration and communication

Now let’s shift our attention to the Siberian snows. A pack of wolves is stalking a herd of deer and the deer take fright. The younger, weaker and slower deer are evident, and as the chase begins the pack leader spots a likely prey and runs for it. One of its pack mates sees the leader’s movements, and sees that the deer, while weak, is making a break; so it heads off the deer, turning it to bay, and the rest of the pack moves in.

Again the animals read each other’s movements and the subtle signs of action and intention. However, here the pack needs to act in concert; they are collaborating in the kill, and so it is to their advantage if the visible (and aural) signs of their actions and intentions are clear to one another. Indeed, before the chase begins the pack leader may have identified the likely target and the rest of the pack seen this through the direction of gaze. A class of hunting dogs are called ‘pointers’ because they literally point their noses towards prey; the modern hunter with green wellies and shotgun is reading their signs just as the pack mates of their ancestors did thousands of years before.

In some ways the reading of the actions of the other wolves is just like the lioness reading the actions and intentions of the zebra, or vice versa. However, because the lioness and zebra are in conflict, they each seek (whether intentionally or instinctively) to obfuscate the signs of their actions. In contrast, the wolf pack are cooperating, so while they may need to obfuscate their intentions from the deer, it is to their advantage to make these clear to one another.

As with the lioness and the zebra, this may include long-term changes in appearance, but certainly includes changes in instinctive behaviour. The ‘pointing’ of pointer dogs taps into such instincts. However, the form of this is particular. It is natural, when seeking out and stalking prey, for the dog (or its wolf ancestor) to look at the selected target, and the other animals are attuned to these natural signs. At some point, however, the ‘looking at’ becomes exaggerated beyond what is essential for the action itself; it is just like the original action, but ‘more so’. So, without any change in the perceptions of others, a form of message is given: the sign becomes a signal⁴.

Of course, the process is symmetric: over time animals adapt not only to notice the ordinary signs of action, but especially to notice the exaggerated movements signalling intentions. Sometimes the exaggerated signal becomes divorced from the original signs and takes on a life of its own⁵. This is especially evident in mating rituals, which are not moderated by the need to obfuscate actions from prey.

Cooperation in conflict

Some conflict situations, particularly predator-prey, are zero-sum; there is a winner and a loser and no middle ground. However, in other situations, such as animals competing for food or males competing for leadership of a herd or pack, there is a zero-sum competition for the food or position, but it is in both competitors’ interests to avoid actually fighting, which would hurt both.

This young bull elephant below clearly means business: the extended ears say, “I’m getting upset, I don’t want you near”. However, it is not in the interest of the elephant to charge a car, nor in the interests of the occupants to stay around. We drove away!

Here the elephant and the car occupants are cooperating to prevent a conflict about territory from becoming a physical confrontation. The elephant is using the same signal of raised ears as he would use to another elephant or animal. It is partly a deception, making the elephant look bigger than it is (it wants to win in the conflict!), but also a signal, “I don’t want to fight, but I will”, which is understood by other elephants … and car drivers.

Towards gesture

Of course, as deliberative creatures, we humans not only act instinctively, but also act deliberately, with forethought and consideration.

Some of this we learn without being aware that we do so. A baby may cry because it is hurting and its mother comforts it, but later it may cry to obtain attention. Watch a small child who falls over and bursts into tears. The tears will often stop abruptly if no one notices. It is not that there is no hurt, nor that the tears are purely a show, but they are exaggerated to become a signal to the parent, a message, not just a sign of the pain.

As adults our behaviours are full of these unconscious signals: smiles and frowns, looking at our wrist (even if we do not have a watch) if it feels time to leave, or slightly exaggerated shuffling of papers when a meeting has gone on too long.

The border between this and more thought-out, deliberate action is blurred. Certainly politicians and senior managers both naturally give out these signals, but they may also learn explicitly how to read and how to create these impressions, from shoulder pads to the power stare.

A few years ago I was putting a note on my door as I left my office for a few minutes, knowing a visitor was due. I suddenly noticed myself adjusting the note to make it less vertical. This was not a conscious action, but clearly deliberate. On reflection I realised that the door was covered in various permanent posters and notices, so there was a real danger that the visitor might knock at the door and never notice the temporary note amongst this background. However, a temporary note will typically be put up in a hurry and will not be carefully aligned; so its disposition⁶, its skew-whiff angle, acts as a sign to the visitor that it is temporary and so worth reading. Without explicitly realising it, I was exaggerating this sign of temporariness. Now that I know (explicitly) the ‘trick’ I deliberately do this; but note the unconscious complexity of everyday thought that was achieving this without my awareness.

This is not a one-off phenomenon. I have also found myself leaving my office light on when I leave the office, as a signal that I am ‘in’: again emulating what could be an accidental sign of presence … albeit not very environmentally sound.

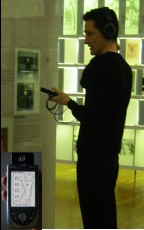

One of the Equator experiments took place in the Mack Room, a gallery in the Lighthouse arts centre in Glasgow⁷. The experiment linked three participants: one was in the Mack Room itself with a PDA tracked using ultrasonic sensors, the second was using desktop VR to navigate a model of the room, and the third was using plain web pages. They were all able to speak to one another through microphones, and each could see a representation of themselves and the others in a map view.

Even when still, the person in the room would sway slightly (and maybe the ultrasonic sensors had some noise), so there was a slight movement of their avatar, but the avatar of the VR participant was rock steady as soon as their hands left the cursor keys (used for moving). After a while the VR participant was seen to ‘wiggle’ periodically, deliberately using small cursor movements to draw attention to speech or location by emulating the natural wiggle of a standing person.

Onomatopoeic action

These effects are similar to what I used to call onomatopoeic action. When you are driving down the road (on the left, as in the UK) and want to turn right at the next junction, you may gradually move the car towards the middle of the road. When turning into a wide road this is the correct road position. If the road you are turning into is narrow, however, you need more room to turn, and the appropriate position to start the manoeuvre is on the opposite (left) side of the road. Yet even in such situations one often initially moves to the centre, and only at the last minute sweeps to the left before making the right turn. Why?

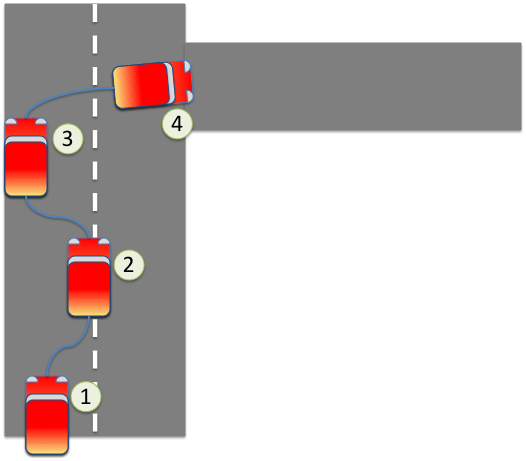

Turning right into a narrow road: (1) normal road position, (2) initially move towards the centre of the road, then (3) move to the left ready for (4) the swing into the narrow entrance.

The initial movement to the centre (labelled (2) in the diagram) is not necessary for the action of turning, but is a signal to other road users that you intend to turn right. Obviously you can use your indicators as well, but we often trust our reading of other drivers’ road positions more than explicit signals, and a driver behind, seeing someone pull over to the left, might mistake it for stopping and erroneously and dangerously decide to overtake just as you make your turn 🙁

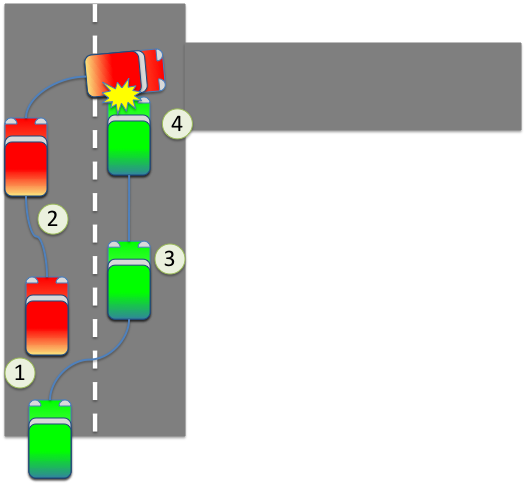

Things go wrong: (1) normal road position with car following, (2) move to the left ready for the manoeuvre, following car assumes you are stopping so (3) begins to overtake, at which point you complete your manoeuvre turning right and … (4) boom!

Words, like ‘boom’ or ‘tinkle’, that imitate in their sound what they denote in meaning are called onomatopoeic. The action of moving into the middle of the road conveys in its form (rightness) the intended future action (turning right), so can be thought of as an onomatopoeic action. The skew-whiff placement of notes on the door, leaving the light on, looking at a non-existent watch all have this onomatopoeic element.

To language: from exaggeration to representation

Note that the development of the ‘move to the centre to signal a right turn’ action will almost certainly have started out as exaggeration. On a wide road it is not necessary to move to right or left, but simply to turn from the normal road position. However, the movement to the centre begins the action ahead of time, exaggerating it. It is the start of the actual action, but also emphasises it to others.

By the time we have the right turn in the narrow road, the action of moving to the centre of the road has become divorced from the actual action of turning right. It is representative of or maybe even symbolic of the action of turning right, but is not actually a part of the action (which really needs a position to the left).

This is similar to the runaway mating displays of birds and other animals, but whereas these are solely instinctive, our own actions of this kind often mix reactive and deliberative elements.

This is reminiscent of the idea of accountability in ethnomethodology: as we act in a social situation we know that our actions and activities are available to others and must therefore be meaningful to others within the particular cultural and social settings. Similarly Goffman regarded day-to-day activity as a performance; just as an actor performs on-stage, we perform moment to moment for those around us and often for ourselves. However, while the actor emulates fictive actions, when we perform in an exaggerated way we effectively emulate what we are doing anyway!

The important shift occurs at the moment when we no longer emulate what we are doing, but emulate in order to convey what we may be about to do. We then move from observable actions to representative gestures and surely the beginnings of symbolic language.

1. Note that the choice of two participants in the CSCW framework diagram seemed unproblematic, until some years later I realised it had limited the scope of the analysis of awareness. A third participant is needed in order to talk about the way one person is aware of the communications or collaborative actions of others. The diagram had proved incredibly powerful in suggesting questions and issues, but by its nature had precluded others; a good lesson about any diagram, notation or system of thought!

2. Knister, M.J. and Prakash, A. (1990). DistEdit: a distributed toolkit for supporting multiple group editors. In CSCW’90 Proceedings of the Conference on Computer-Supported Cooperative Work, ACM SIGCHI & SIGOIS, 343-355.

3. Leland, M.D.P., Fish, R.S. and Kraut, R.E. (1988). Collaborative document production using Quilt. In Proceedings of CSCW’88, 206-215.

4. I am aware that I am using the words ‘sign’ and ‘signal’ in nearly the opposite way to that used in some branches of semiotics. However, the sense here is the normal sense in the English language, where one talks about the crocus being a sign of spring, or smoke being a sign of fire, but smoke deliberately covered and uncovered to create a characteristic pattern is a signal. In ordinary language a signal suggests intention, something emitted deliberately to be noticed, the possession of the sender. In contrast, signs are noticed, but not necessarily intended; indeed the thing that has a sign may not even be animate; the meaning of a sign belongs to the recipient. In fact, doing a quick check, it seems that in Peirce’s semiotics ‘sign’ is very close to the meaning here.

5. I’m not an expert on Peirce, but I think he would refer to the point at which the sign becomes detached from its ‘natural’ connection to the action as the change from sinsign to legisign.

6. We have used the term ‘disposition’ in previous work, such as “Trigger Analysis – understanding broken tasks” and “Artefacts as designed, Artefacts as used: resources for uncovering activity dynamics”. Often the location of an artefact is important (papers placed on a chair as reminders, or in a particular position on a desk). However, the way in which it is placed in that location is often also significant: its disposition (straight or at an angle, front-side up or down).

7. See the Equator pages about the Mack Room project, the Mackintosh Interpretation Centre web site, and the HCI book case study on the Mack Room.