I just noticed the following excerpt on the web page describing a rich-text editing component:

Supported Browsers (Confirmed)

… list …

Note: This list is now out of date and some new browsers such as Safari 3.0+ and Opera 9.5+ suffer from some issues.

(Free Rich Text Editor – www.freerichtexteditor.com)

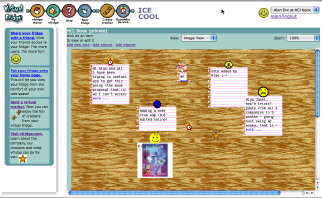

In odd moments I have recently been working on bringing vfridge back to life. Partly this is necessary because the original Java Servlet code was such a pig[1], but partly because the dynamic HTML code had ‘died’. To be fair, vfridge was produced in the early days of DHTML, so one might expect things to change between then and now. However, reading the above web page about a component produced much more recently, I wonder why it is that on the web, and elsewhere, we are so bad at being backward compatible … and I recall my own ‘pain and tears’ struggling with broken backward compatibility in Office 2008.

I’d started looking at current rich-text editors after seeing Paul James’ “Small, standards compliant, Javascript WYSIWYG HTML control”. Unlike many of the controls that seem to produce MS-like output with <font> tags littered randomly around, Paul’s control emphasises standards compliance in HTML, and uses the emerging de facto designMode[2] support in browsers.

This seems good, but one wonders how long these standards will survive, especially the de facto one, given past history; will Paul James’ page have a similar notice in a year or two?

The W3C approach … and a common institutional one … is to define unique standards that are (intended to be) universal and unchanging, so that if we all use them everything will still work in 10,000 years’ time. This is a grand vision, but it only works if the standards are sufficiently:

- expressive, so that everything you want to do now can be done (e.g. not deprecating tables for layout before CSS offered design grids, a gap that led to many horrible CSS ‘hacks’)

- omnipotent, so that everyone (MS, Apple) does what they are told

- simple, so that everyone implements them correctly

- prescient, so that all future needs are anticipated before multiple differing de facto ‘standards’ emerge

The last of these is why vfridge’s DHTML died: we wanted rich client-side interaction when the stable standards offered little beyond transactions. It also looks like the reason many rich-text editors are struggling now.

A completely different approach (requiring a degree of humility from standards bodies) would be to accept that standards always fall behind practice, and to design this into the standards themselves. There need to be simple (and therefore consistently supported) ways of specifying:

- which versions of which browsers a page was designed to support, so that browsers can be backward or cross-browser compatible

- alternative content for different browsers and versions … and no, the DTD does not do this, as different versions of browsers have different interpretations of, and bugs in, different HTML variants. W3C groups looking at cross-device mark-up already have work in this area … although it may fail the simplicity test.

Perhaps more problematically, browsers need to commit to being backward compatible wherever possible … I am thinking especially of the way IE fixed its own broken CSS implementation, but did so in a way that broke all the standard hacks that had been developed to work around the old bugs! Currently this would mean fossilising old design choices and even old bugs, but if web-page meta information specified the intended browser version, the browser could selectively operate on older pages in ways compatible with the older browsers, whilst offering improved behaviour for newer pages.
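To make the version-targeting idea a little more concrete, here is a minimal TypeScript sketch of how a browser might pick a rendering mode from a page’s declared target version. Everything in it is hypothetical: the mode names, version numbers and the `selectMode` function are invented for illustration and are not any real browser’s mechanism. It also assumes that a page with no declaration is an old page and should get the oldest behaviour.

```typescript
// Hypothetical: each rendering mode the browser still ships, tagged with the
// browser version that introduced it. Names and versions are illustrative only.
interface RenderingMode {
  name: string;
  introducedIn: number; // browser major version
}

const MODES: RenderingMode[] = [
  { name: "legacy-v6", introducedIn: 6 },    // old behaviour, bugs and all
  { name: "fixed-css-v7", introducedIn: 7 }, // CSS bugs fixed
  { name: "current-v8", introducedIn: 8 },   // newest behaviour
];

// Pick the newest mode that already existed in the browser version the page
// declares it was designed for. Undeclared pages (assumed to be old) and
// pages targeting a version older than every mode get the oldest mode, so
// existing workarounds keep working.
function selectMode(
  declaredVersion: number | undefined,
  modes: RenderingMode[] = MODES
): string {
  if (modes.length === 0) {
    throw new Error("at least one rendering mode is required");
  }
  const sorted = modes.slice().sort((a, b) => a.introducedIn - b.introducedIn);
  if (declaredVersion === undefined) {
    return sorted[0].name;
  }
  let chosen = sorted[0];
  for (const mode of sorted) {
    if (mode.introducedIn <= declaredVersion) {
      chosen = mode;
    }
  }
  return chosen.name;
}
```

For example, `selectMode(7)` returns `"fixed-css-v7"`, while `selectMode(undefined)` returns `"legacy-v6"`. The design choice is that old modes are only ever added to, never changed: a page that declared version 7 keeps its version-7 behaviour, hacks included, even after a later version fixes the bugs those hacks worked around.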

1. The vfridge Java Servlets used to run fine, but got worse and worse over time: as machines got faster and JVM versions improved with supposedly faster byte-code compilers, the same code strangely got slower and slower, until now it only produces results intermittently … another example of backward compatibility failing.

2. I would give a link to designMode, except that I notice everyone else’s links seem to be broken … presumably MSDN URLs are not backward compatible either 🙁 Your best bet is simply to Google “designMode”.