How a child’s puzzle game gives insight into more human-like explanations of AI decisions

Many of you will have played Mastermind, the simple board game with coloured pegs where you have to guess a hidden pattern. At each turn the person who set the hidden pattern scores the challenger's guess, until the challenger finds the exact colours and arrangement.

As a child I imagined a variant, “Cheat Mastermind”, where the hider is allowed to change the hidden pegs mid-game so long as the new arrangement is consistent with all the scores given so far.

This variant gives the hider a more strategic role, but also changes the mathematical nature of the game. In particular, if the hider is good at their job, the challenger always faces the worst case, which is the case a minimax strategy guards against.
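To make the hider's side of the game concrete, here is a minimal Python sketch. It assumes a tiny board (four colours, three pegs) and a greedy hider who always gives the score that leaves the most arrangements still possible; that is one simple way of being "good at the job", not necessarily the optimal one. The names `score`, `consistent`, `cheat_reply` and `play` are invented for illustration. Note that the hider never needs to fix a secret at all: any arrangement left in the candidate list could be the "current" hidden pattern.

```python
from collections import Counter
from itertools import product

COLOURS = "RGBY"  # assumption: four colours
PEGS = 3          # assumption: three positions, to keep the search tiny


def score(secret, guess):
    """Return (black, white): right colour in the right place,
    and right colour in the wrong place."""
    black = sum(s == g for s, g in zip(secret, guess))
    # Colours shared regardless of position, then remove the exact matches.
    overlap = sum((Counter(secret) & Counter(guess)).values())
    return black, overlap - black


def consistent(candidate, history):
    """True if candidate would have produced every score given so far."""
    return all(score(candidate, guess) == reply for guess, reply in history)


def cheat_reply(candidates, guess):
    """Greedy cheating hider: group the still-possible secrets by the score
    they would give, then answer with the score of the largest group.
    Ties are broken in favour of fewer black, then fewer white, pegs."""
    groups = {}
    for c in candidates:
        groups.setdefault(score(c, guess), []).append(c)
    reply = max(groups, key=lambda s: (len(groups[s]), -s[0], -s[1]))
    return reply, groups[reply]


def play():
    """A naive challenger (always guesses the first still-possible pattern)
    against the cheating hider. Returns the list of (guess, reply) pairs."""
    candidates = ["".join(p) for p in product(COLOURS, repeat=PEGS)]
    history = []
    while True:
        guess = candidates[0]
        reply, candidates = cheat_reply(candidates, guess)
        history.append((guess, reply))
        if reply == (PEGS, 0):
            return history


if __name__ == "__main__":
    for guess, reply in play():
        print(guess, reply)
```

Because every reply is chosen from scores that some remaining arrangement really would give, the final pattern is consistent with the whole history, which is exactly the rule of the variant.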

More recently, as part of the TANGO project on hybrid human-AI decision making, we realised that the game can be used to illustrate a key requirement for explainable AI (XAI). Nick Chater and Simon Myers at Warwick have been looking at theories of human-to-human explanations and highlighted the importance of coherence, the need for consistency between explanations we give for a decision now and future decisions. If I explain a food choice by saying “I prefer sausages to poultry”, you would expect me to subsequently choose sausages if given a choice.

Cheat Mastermind captures this need to make our present decisions consistent with those in the past. Of course, in the simplified world of puzzles this is a perfect match, but in real world decisions things are more complex. Our explanations are often ‘local’ in the sense they are about a decision in a particular context, but still, if future decisions disagree with earlier explanations, we need to be able to give a reason for the exception: “turkey dinners at Christmas are traditional”.

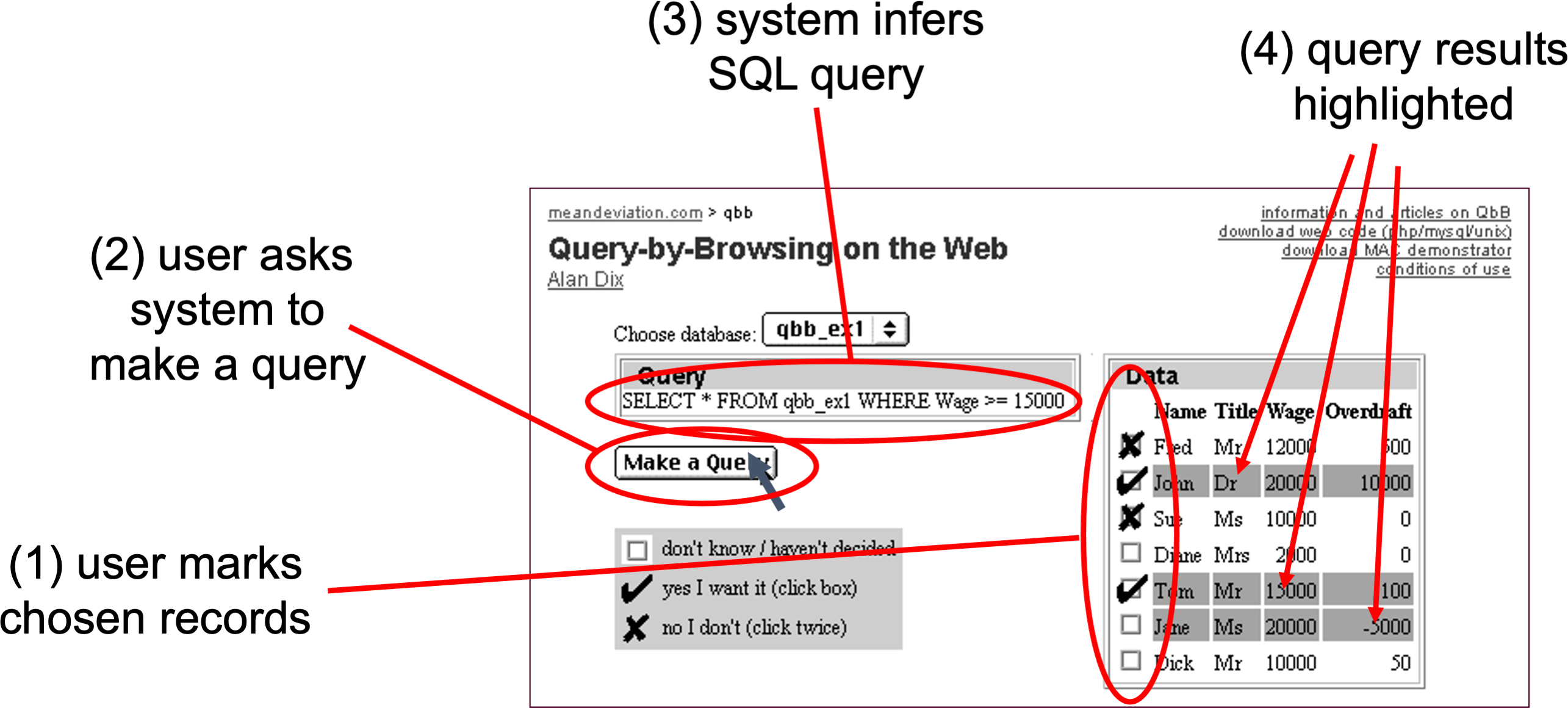

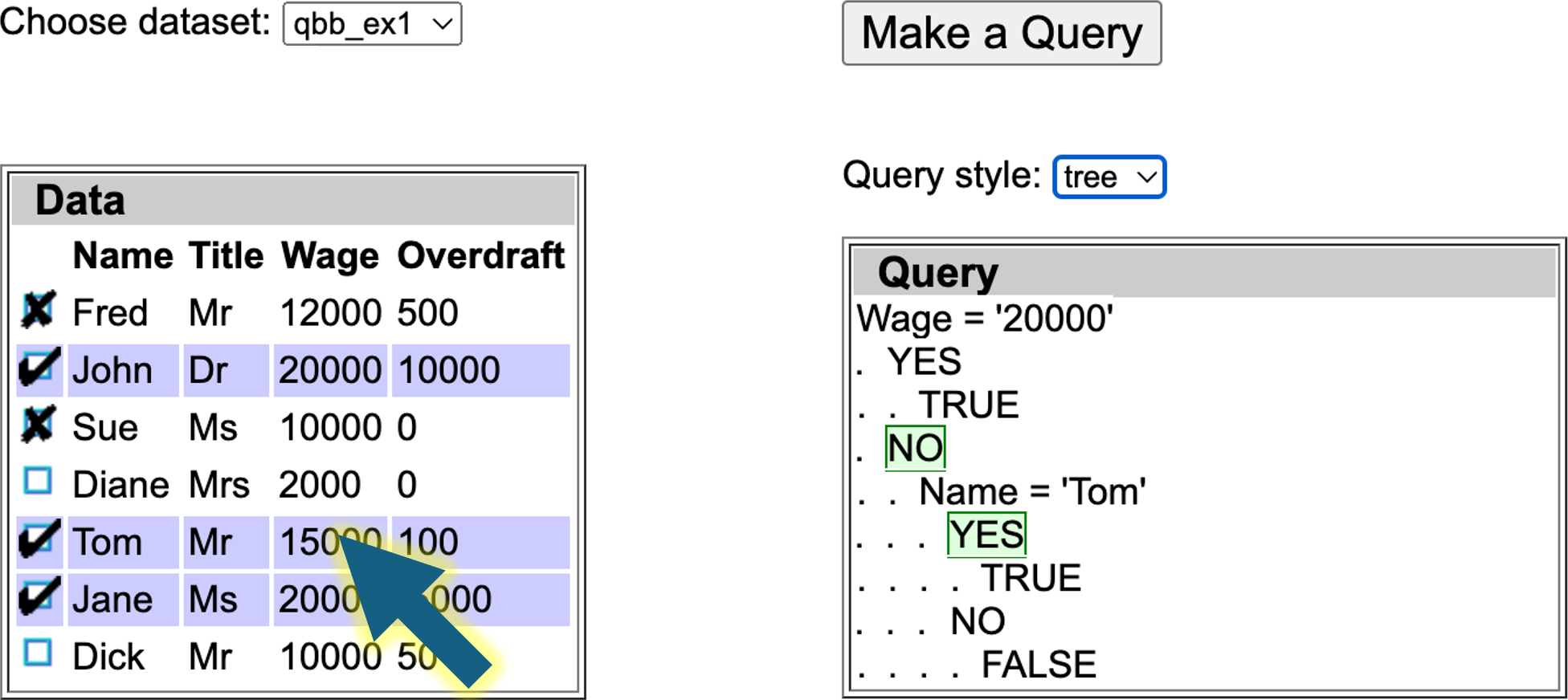

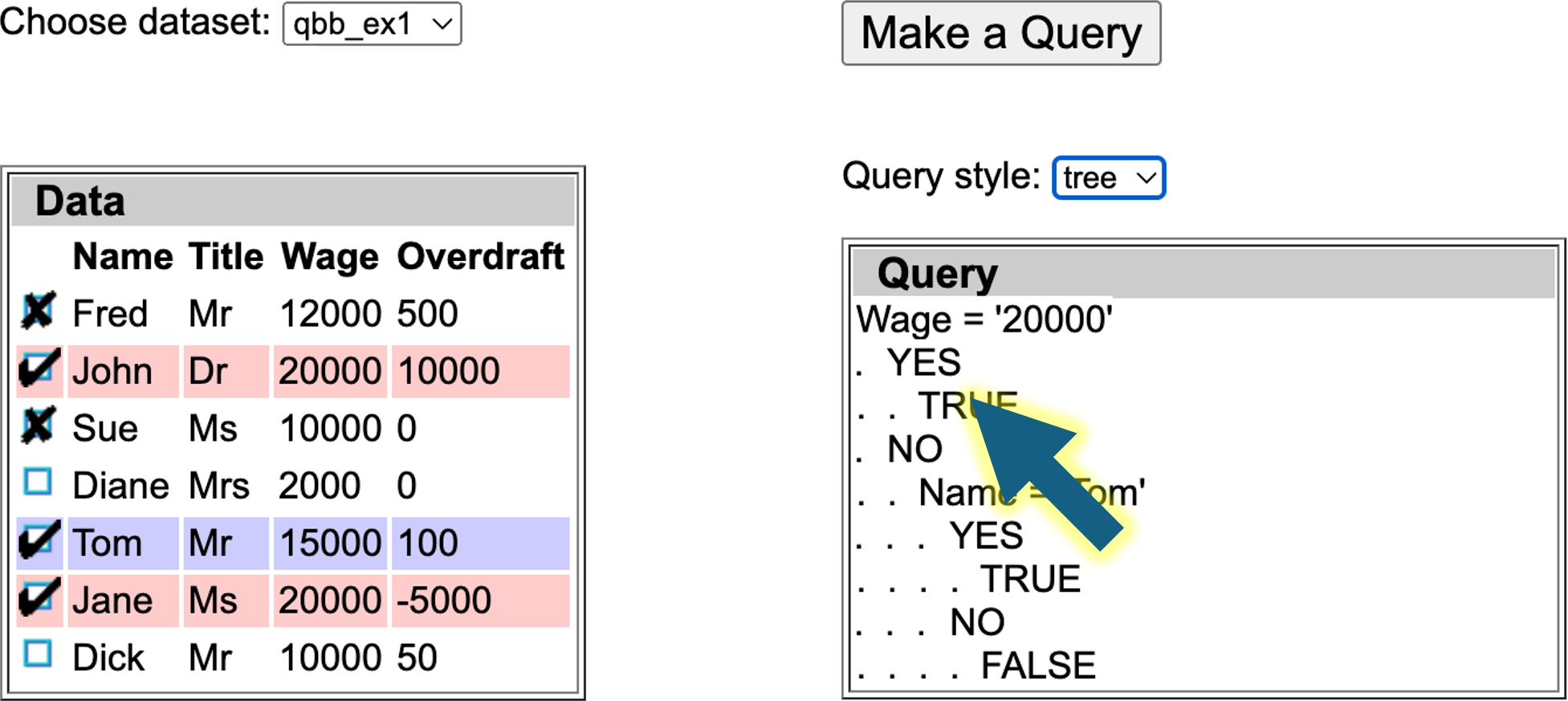

Machine learning systems and AI offer various forms of explanation for their decisions or classifications. In some cases it may be a nearby example from training data, in some cases a heat map of areas of an image that were most important in making a classification, or in others an explicit rule that applies locally (in the sense of ‘nearly the same data’). The way these are framed initially is very formal, although they may be expressed in more humanly understandable visualisations.

Crucially, because these start in the computer, most can be checked or even executed (in the case of rules) by the computer. This offers several possible strategies for ensuring future consistency or at least dealing with inconsistency … all very like human ones.

- highlight inconsistency with previous explanations: “I know I said X before, but this is a different kind of situation”

- explain inconsistency with previous explanations: “I know I said X before, but this is different because of Y”

- constrain consistency with previous explanations by adding the previous explanation “X” as a constraint when making future decisions. This may only be possible with some kinds of machine learning algorithms.

- ensure consistency by using the previous explanation “X” as the decision rule when the current situation is sufficiently close; that is, completely bypass the original AI system.

The last mimics a crucial aspect of human reasoning: by being forced to reflect on our unconscious (type 1) decisions, we create explicit understanding and then may use this in more conscious rational (type 2) decision making in the future.
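That last strategy can be sketched in a few lines of Python. This is only an illustration under stated assumptions: situations are numeric feature vectors, "sufficiently close" means within a fixed Euclidean radius of the case the explanation was originally given for, and the names `Explanation`, `CoherentDecider` and `toy_model` are all hypothetical.

```python
from dataclasses import dataclass, field
from math import dist
from typing import Callable, List, Sequence, Tuple


@dataclass
class Explanation:
    """A past explanation kept as an executable local rule (hypothetical)."""
    centre: Sequence[float]                 # the case it was given for
    radius: float                           # assumed meaning of "sufficiently close"
    rule: Callable[[Sequence[float]], str]  # the explanation as a decision rule
    text: str                               # the human-readable form


@dataclass
class CoherentDecider:
    """Wraps an underlying model; earlier explanations take priority nearby."""
    model: Callable[[Sequence[float]], str]
    memory: List[Explanation] = field(default_factory=list)

    def remember(self, explanation: Explanation) -> None:
        self.memory.append(explanation)

    def decide(self, x: Sequence[float]) -> Tuple[str, str]:
        # If an earlier explanation covers this situation, use it as the
        # decision rule and bypass the underlying model entirely.
        for e in self.memory:
            if dist(x, e.centre) <= e.radius:
                return e.rule(x), f"as I said before: {e.text}"
        return self.model(x), "new decision from the underlying model"


def toy_model(x: Sequence[float]) -> str:
    """Stand-in for an opaque model: picks whichever dish scores higher.
    x = (sausage appeal, poultry appeal)."""
    return "poultry" if x[1] > x[0] else "sausages"


decider = CoherentDecider(toy_model)
decider.remember(Explanation(
    centre=(0.5, 0.5),
    radius=0.3,
    rule=lambda x: "sausages",
    text="I prefer sausages to poultry",
))

near = decider.decide((0.5, 0.6))   # close to the explained case
far = decider.decide((0.1, 0.95))   # well outside the radius
```

In the nearby case the toy model on its own would have chosen poultry, but the remembered explanation wins; in the distant case the model decides freely, and this is where a reason for the exception would be needed.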

Of course, the third strategy, constraining future decisions to be consistent with previous explanations, is precisely Cheat Mastermind.