Where are the boundaries between freedom, license and exploitation, between fair use and theft?

Where are the boundaries between freedom, license and exploitation, between fair use and theft?

I found myself getting increasingly angry today as Mozilla Foundation stepped firmly beyond those limits, and moreover with Trump-esque rhetoric attempts to dupe others into following them.

It all started with a small text add below the Firefox default screen search box:

Partly because of my ignorance of web-speak ‘TFW‘ (I know showing my age!), I clicked through to a petition page on Mozilla Foundation (PDF archive copy here).

It starts off fine, with stories of some of the silliness of current copyright law across Europe (can’t share photos of the Eiffel tower at night) and problems for use in education (which does in fact have quite a lot of copyright exemptions in many countries). It offers a petition to sign.

This sounds all good, partly due to rapid change, partly due to knee jerk reactions, internet law does seem to be a bit of a mess.

If you blink you might miss one or two odd parts:

“This means that if you live in or visit a country like Italy or France, you’re not permitted to take pictures of certain buildings, cityscapes, graffiti, and art, and share them online through Instagram, Twitter, or Facebook.”

Read this carefully, a tourist forbidden from photographing cityscapes – silly! But a few words on “… and art” … So if I visit an exhibition of an artist or maybe even photographer, and share a high definition (Nokia Lumia 1020 has 40 Mega pixel camera) is that OK? Perhaps a thumbnail in the background of a selfie, but does Mozilla object to any rules to prevent copying of artworks?

However, it is at the end, in a section labelled “don’t break the internet”, the cyber fundamentalism really starts.

“A key part of what makes the internet awesome is the principle of innovation without permission — that anyone, anywhere, can create and reach an audience without anyone standing in the way.”

Again at first this sounds like a cry for self expression, except if you happen to be an artist or writer and would like to make a living from that self-expression?

Again, it is clear that current laws have not kept up with change and in areas are unreasonably restrictive. We need to be ale to distinguish between a fair reference to something and seriously infringing its IP. Likewise, we could distinguish the aspects of social media that are more like looking at holiday snaps over a coffee, compared to pirate copies for commercial profit.

However, in so many areas it is the other way round, our laws are struggling to restrict the excesses of the internet.

Just a few weeks ago a 14 year old girl was given permission to sue Facebook. Multiple times over a 2 year period nude pictures of her were posted and reposted. Facebook hides behind the argument that it is user content, it takes down the images when they are pointed out, and yet a massive technology company, which is able to recognise faces is not able to identify the same photo being repeatedly posted. Back to Mozilla: “anyone, anywhere, can create and reach an audience without anyone standing in the way” – really?

Of course this vision of the internet without boundaries is not just about self expression, but freedom of speech:

“We need to defend the principle of innovation without permission in copyright law. Abandoning it by holding platforms liable for everything that happens online would have an immense chilling effect on speech, and would take away one of the best parts of the internet — the ability to innovate and breathe new meaning into old content.”

Of course, the petition is signalling out EU law, which inconveniently includes various provisions to protect the privacy and rights of individuals, not dictatorships or centrally controlled countries.

So, who benefits from such an open and unlicensed world? Clearly not the small artist or the victim of cyber-bullying.

Laissez-faire has always been an aim for big business, but without constraint it is the law of the jungle and always ends up benefiting the powerful.

In the 19th century it was child labour in the mills only curtailed after long battles.

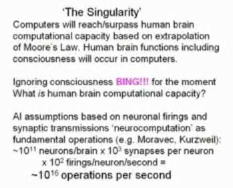

In the age of the internet, it is the vast US social media giants who hold sway, and of course the search engines, who just happen to account for $300 million of revenue for Mozilla Foundation annually, 90% of its income.

I was reading a quite disturbing article on a

I was reading a quite disturbing article on a