Names and naming have always been a big issue both in computer science and philosophy, and a topic I have posted on before (see “names – a file by any other name“).

In computer science, and in particular programming languages, a whole vocabulary has arisen to talk about names: scope, binding, referential transparency. As in philosophy, it is typically the association between a name and its ‘meaning’ that is of interest. Names and words, whether in programming languages or day-to-day language, are, what philosophers call, ‘intentional‘: they refer to something else. In computer science the ‘something else’ is typically some data or code or a placeholder/variable containing data or code, and the key question of semantics or ‘meaning’ is about how to identify which variable, function or piece of data a name refers to in a particular context at a particular time.

The emphasis in computing has tended to be about:

(a) Making sure names have unambiguous meaning when looking locally inside code. Concerns such as referential transparency, avoiding dynamic binding and the deprecation of global variables are about this.

(b) Putting boundaries on where names can be seen/understood, both as a means to ensure (a) and also as part of encapsulation of semantics in object-based languages and abstract data types.

However, there has always been a tension between clarity of intention (in both the normal and philosophical sense) and abstraction/reuse. If names are totally unambiguous then it becomes impossible to say general things. Without a level of controlled ambiguity in language a legal statement such as “if a driver exceeds the speed limit they will be fined” would need to be stated separately for every citizen. Similarly in computing when we write:

function f(x) { return (x+1)*(x-1); }

The meaning of x is different when we use it in ‘f(2)’ or ‘f(3)’ and must be so to allow ‘f’ to be used generically. Crucially there is no internal ambiguity, the two ‘x’s refer to the same thing in a particular invocation of ‘f’, but the precise meaning of ‘x’ for each invocation is achieved by external binding (the argument list ‘(2)’).

Come the web and URLs and URIs.

Fiona@lovefibre was recently making a test copy of a website built using WordPress. In a pure html website, this is easy (so long as you have used relative or site-relative links within the site), you just copy the files and put them in the new location and they work 🙂 Occasionally a more dynamic site does need to know its global name (URL), for example if you want to send a link in an email, but this can usually be achieved using configuration file. For example, there is a development version of Snip!t at cardiff.snip!t.org (rather then www.snipit.org), and there is just one configuration file that needs to be changed between this test site and the live one.

Similarly in a pristine WordPress install there is just such a configuration file and one or two database entries. However, as soon as it has been used to create a site, the database content becomes filled with URLs. Some are in clear locations, but many are embedded within HTML fields or serialised plugin options. Copying and moving the database requires a series of SQL updates with string replacements matching the old site name and replacing it with the new — both tedious and needing extreme care not to corrupt the database in the process.

Is this just a case of WordPress being poorly engineered?

In fact I feel more a problem endemic in the web and driven largely by the URL.

Recently I was experimenting with Firefox extensions. Being a good 21st century programmer I simply found an existing extension that was roughly similar to what I was after and started to alter it. First of course I changed its name and then found I needed to make changes through pretty much every file in the extension as the knowledge of the extension name seemed to permeate to the lowest level of the code. To be fair XUL has mechanisms to achieve a level of encapsulation introducing local URIs through the ‘chrome:’ naming scheme and having been through the process once. I maybe understand a bit better how to design extensions to make them less reliant on the external name, and also which names need to be changed and which are more like the ‘x’ in the ‘f(x)’ example. However, despite this, the experience was so different to the levels of encapsulation I have learnt to take for granted in traditional programming.

Much of the trouble resides with the URL. Going back to the two issues of naming, the URL focuses strongly on (a) making the name unambiguous by having a single universal namespace; URLs are a bit like saying “let’s not just refer to ‘Alan’, but ‘the person with UK National Insurance Number XXXX’ so we know precisely who we are talking about”. Of course this focus on uniqueness of naming has a consequential impact on generality and abstraction. There are many visitors on Tiree over the summer and maybe one day I meet one at the shop and then a few days later pass the same person out walking; I don’t need to know the persons NI number or URL in order to say it was the same person.

Back to Snip!t, over the summer I spent some time working on the XML-based extension mechanism. As soon as these became even slightly complex I found URLs sneaking in, just like the WordPress database 🙁 The use of namespaces in the XML file can reduce this by at least limiting full URLs to the XML header, but, still, embedded in every XML file are un-abstracted references … and my pride in keeping the test site and live site near identical was severely dented.

In the years when the web was coming into being the Hypertext community had been reflecting on more than 30 years of practical experience, embodied particularly in the Dexter Model. The Dexter model and some systems, such as Wendy Hall’s Microcosm, incorporated external linkage; that is, the body of content had marked hot spots, but the association of these hot spots to other resources was in a separate external layer.

Sadly HTML opted for internal links in anchor and image tags in order to make html files self-contained, a pattern replicated across web technologies such as XML and RDF. At a practical level this is (i) why it is hard to have a single anchor link to multiple things, as was common in early Hypertext systems such as Intermedia, and (ii), as Fiona found, a real pain for maintenance!

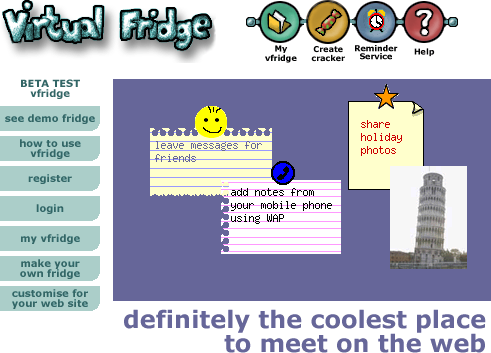

The core idea of vfridge is placing small notes, photos and ‘magnets’ in a shareable web area that can be moved around and arranged like you might with notes held by magnets to a fridge door.

The core idea of vfridge is placing small notes, photos and ‘magnets’ in a shareable web area that can be moved around and arranged like you might with notes held by magnets to a fridge door. Just over a year ago I thought it would be good to write a retrospective about vfridge in the light of the social networking revolution. We did a poster “

Just over a year ago I thought it would be good to write a retrospective about vfridge in the light of the social networking revolution. We did a poster “