As I run in the early morning, the dawn sun drives a burnt orange road across the bay. The water’s margin is often the best place to tread, the sand damp and solid, sound underfoot, but unpredictable. The tide was high and at first I thought it had just turned, the damp line a full five yards beyond the edge of the current waves. Some waves pushed higher and I had to swerve and dance to avoid the frothing edge, others lower, wave following wave, but in longer cycles, some higher, some lower.

It was only later I realised the tide was still moving in; the damp line I had seen as the zenith of high tide had merely been the high point of a cycle, and I had run out during a temporary low. Cycles within cycles: the larger cycles predictable and periodic, driven by moon and sun, but the smaller ones, the waves and patterns of waves, driven by wind and distant storms thousands of miles away.

I’m reading Kate Raworth’s Doughnut Economics. She describes the way 20th-century economists (and many still) were wedded to simple linear models of closed processes, and hence missed the crucial complexities of an interconnected world, so making the (predictable) crashes far worse.

I was fortunate in that even in school I recall watching the BBC documentary on chaos theory and then attending an outreach lecture at Cardiff University, targeted at children, where the speaker, an expert in chaos and catastrophe theory, gave a more mathematical treatment. Ideas of quasi-periodicity, non-linearity, feedback, phase change, tipping points and chaotic behaviour have been part of my understanding of the world since early in my education.

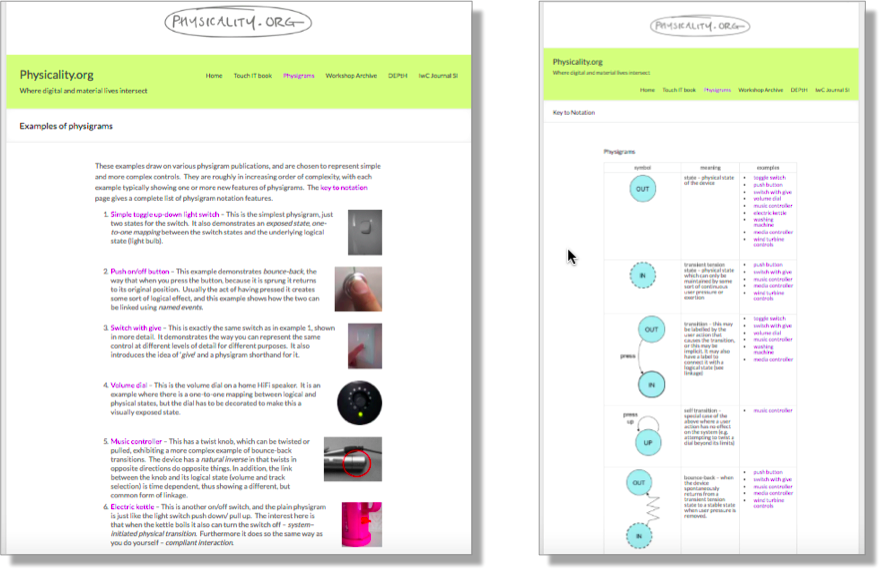

Nowadays ideas of complexity are more common; Hollywood embraced the idea that the flutter of a butterfly wing could be the final straw that causes a hurricane. This has been helped in no small part by the high profile of the Santa Fe Institute and numerous popular science books. However, only recently I was with a number of academics in computing and mathematics who had not come across ‘criticality’ as a term.

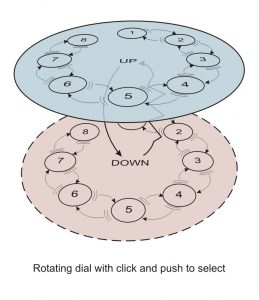

Criticality is about the way many natural phenomena self-organise to be on the edge so that small events have a large impact. The classic example is a pile of sand: initially a whole bucketful tipped on the top will just stay there, but after a point the pile gets to a particular (critical) angle, where even a single grain may cause a minor avalanche.
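For those who like to see such things run, here is a minimal sketch of the sandpile idea. It is not a physical model of slopes and angles; it assumes the well-known grid toy model (the Bak-Tang-Wiesenfeld sandpile), in which a cell holding four or more grains topples and passes one grain to each neighbour, with grains lost over the edge of the grid. The function names, grid size and grain count are my own illustrative choices.

```python
import random


def drop_grain(grid, row, col):
    """Add one grain at (row, col), relax the pile, and return the number of topplings."""
    size = len(grid)
    grid[row][col] += 1
    unstable = [(row, col)]
    topplings = 0
    while unstable:
        r, c = unstable.pop()
        if grid[r][c] < 4:
            continue  # already relaxed by an earlier toppling
        grid[r][c] -= 4
        topplings += 1
        if grid[r][c] >= 4:
            unstable.append((r, c))  # still too tall, revisit later
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            # Grains pushed past the border simply fall off the table.
            if 0 <= nr < size and 0 <= nc < size:
                grid[nr][nc] += 1
                if grid[nr][nc] >= 4:
                    unstable.append((nr, nc))
    return topplings


def simulate(size=20, grains=20000, seed=1):
    """Drop grains at random cells; return the final grid and each avalanche size."""
    rng = random.Random(seed)
    grid = [[0] * size for _ in range(size)]
    avalanches = []
    for _ in range(grains):
        row, col = rng.randrange(size), rng.randrange(size)
        avalanches.append(drop_grain(grid, row, col))
    return grid, avalanches


if __name__ == "__main__":
    _, avalanches = simulate()
    early, late = avalanches[:2000], avalanches[-2000:]
    print("largest avalanche among early grains:", max(early))
    print("largest avalanche among late grains:", max(late))
```

Early on, grains mostly just sit where they land. Once the pile has filled up, the same single grain may do nothing at all or may set off a cascade across much of the grid, which is the qualitative point about criticality.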

If we understand the world in terms of stable phenomena, where small changes cause small effects, and things that go out of kilter are brought back by counter effects, it is impossible to make sense of the wild fluctuations of global economics, political swings to extremism, and cataclysmic climate change.

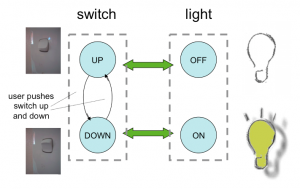

One of the things ignored by some of the most zealous proponents of complexity is that many of the phenomena that we directly observe day-to-day do in fact follow the easier laws of stability and small change. Civilisation develops in parts of the world that are relatively stable, and then, when we modify the world and design artefacts within it, we engineer things that are understandable and controllable, where simple rules work. There are times when we have to stare chaos in the face, but where possible it is usually best to avoid it.

However, even this is changing. The complexity of economics is due to the large-scale networks within global markets with many feedback loops, some rapid, some delayed. With modern media, and more recently the internet and social media, we have amplified this further, and many of the tools of big-data analysis, not least deep neural networks, gain their power precisely because they have stepped out of the world of simple cause and effect and embraced complex and often incomprehensible interconnectivity.

The mathematical and computational analyses of these phenomena are not for the faint-hearted. However, the qualitative understanding of the implications of this complexity should be part of the common vocabulary of society, essential to make sense of climate, economics and technology.

In education we often teach the things we can simply describe, that are neat and tidy, explainable, where we don’t have to say “I don’t know”. Let’s make space for piles of sand alongside pendulums in physics, screaming speaker-microphone feedback in maths, and contingency alongside teleological inevitability in historic narrative.