Nobody on the web can be unaware of the Wikipedia blackout, and anyone who hadn’t heard of SOPA or PIPA before will have now. Few who understand the issues would deny that SOPA and PIPA are misguided and ill-informed; even Apple and other software giants abandoned SOPA, and Obama’s recent statement has effectively scuppered it in its current form. However, at the risk of annoying everyone, am I the only person who finds some of the anti-SOPA rhetoric at best naive and at times simply arrogant?

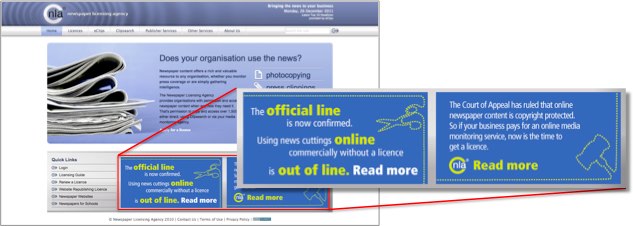

The ignorance behind SOPA and a raft of similar legislation and court cases across the world is deeply worrying. Only recently I posted about the NLA case in the UK, which creates potential copyright issues when linking on the web, reminiscent of the Shetland Times case nearly 15 years ago.

However, that is no excuse for blinkered views on the other side.

I got particularly fed up a few days ago reading an article, “Lockdown: The coming war on general-purpose computing”[1], by copyright activist Cory Doctorow, based on a keynote he gave at the Chaos Computer Congress. The argument was that attempts to limit the internet destroy the very essence of the computer as a general-purpose device and are therefore fundamentally wrong. I know that Sweden has just recognised Kopimism as a religion, but still, an argument that relies on the inviolate nature of computation leaves one wondering.

The article also argued that elected members of Parliament and Congress are by their nature layfolk, and so quite reasonably not expert in every area:

“And yet those people who are experts in policy and politics, not technical disciplines, still manage to pass good rules that make sense.”

Doctorow has trust in the nature of elected democracy for every area from biochemistry to urban planning, but not information technology, which, he asserts, is in some sense special.

Now, even as a computer person, I find this hard to swallow, but what would a geneticist, a physicist, or even a financier using the Black-Scholes model make of it?

Furthermore, Congress is chastised for finding unemployment more important than copyright, and the UN for giving first regard to health and economics; of course, any reasonable person is expected to understand that this is utter foolishness. From what parallel universe does this kind of thinking emerge?

Of course, Doctorow takes an extreme position, but the Electronic Frontier Foundation’s position statement, which Wikipedia points to, offers no alternative proposals and employs scaremongering arguments more reminiscent of the tabloid press, in particular the claim that:

“venture capitalists have said en masse they won’t invest in online startups if PIPA and SOPA pass”

This turns out to be a Google-sponsored report[2], and it refers to “digital content intermediaries (DCIs)”, that is, “search, hosting, and distribution services for digital content”, not startups in general.

When this is the quality of argument being mustered against SOPA and PIPA, is it any wonder that Congress is influenced more by the barons of the entertainment industry?

Obviously some, such as Doctorow and more fundamental anti-copyright activists, would wish to see a completely unregulated net. Indeed, this is starting to be the case de facto in some areas: cover versions of songs are distributed pretty freely on YouTube, apparently without leading to a collapse of the music industry, and they offer new bands a much easier way to make an initial name for themselves. Maybe in 20 years’ time Hollywood will have withered and we will live off a diet of YouTube videos :-/

I suspect most of those opposing SOPA and PIPA do not share this vision; indeed, Google has been paying half a million per patent in recent acquisitions!

I guess the idealist position sees a world of individual freedom, but it is not clear that this is where things are heading. In many areas online distribution has already shifted power away from the traditional producers, the various record companies and book publishers (often relatively large companies themselves), and frequently towards a single mega-corporation in each sector: Amazon for books, Apple’s iTunes for music. For the latter this was in no small part driven by the music industry’s need to react to widespread filesharing. To be honest, however bad the legislation, I would rather entrust myself to elected representatives than to unaccountable multinational corporations[3].

If we do not wish to see poor legislation passed, we need to offer better alternatives, both in terms of the law of the net and in how we reward and fund the creative industries. Maybe the BBC model is best: high-quality entertainment funded by the public purse and then distributed freely. However, I don’t see the US Congress nationalising Hollywood in the near future.

Of course, copyright and IP are only part of a bigger picture in which the net is challenging traditional notions of national borders and sovereignty. In the UK we have seen recent cases where Twitter was used to undermine court injunctions. The injunctions were in place to protect a few celebrities, who were ‘fair game’ anyway, so the cases elicited little public sympathy. However, the Leveson Inquiry has heard evidence from the editor of the Express defending his paper’s suggestion that the McCanns may have killed their own daughter. We expect, and enforce, standards in the print media (the Express paid £500,000 after a libel case); would we expect less if the Express hosted a parallel news website in the Cayman Islands?

Whether it is privacy, malware or child pornography, we do need to think of ways to limit the excesses of the web whilst preserving its strengths. Maybe the solution is more international agreements, and hopefully not yet more extraterritorial laws from the US[4].

Could this day without Wikipedia be not just a call to protest, but also an opportunity to envision what a better future might be?

1. Blanked out today; see Google cache.

2. By Booz&Co, which I at first thought was a wind-up, but which appears to be a real company!

3. As I write this, I am reminded of the corporation-controlled world of Rollerball and other dystopian SciFi.

4. How come there is more protest over plans to shut out overseas web sites than there is over unmanned drones performing extra-judicial executions each week?